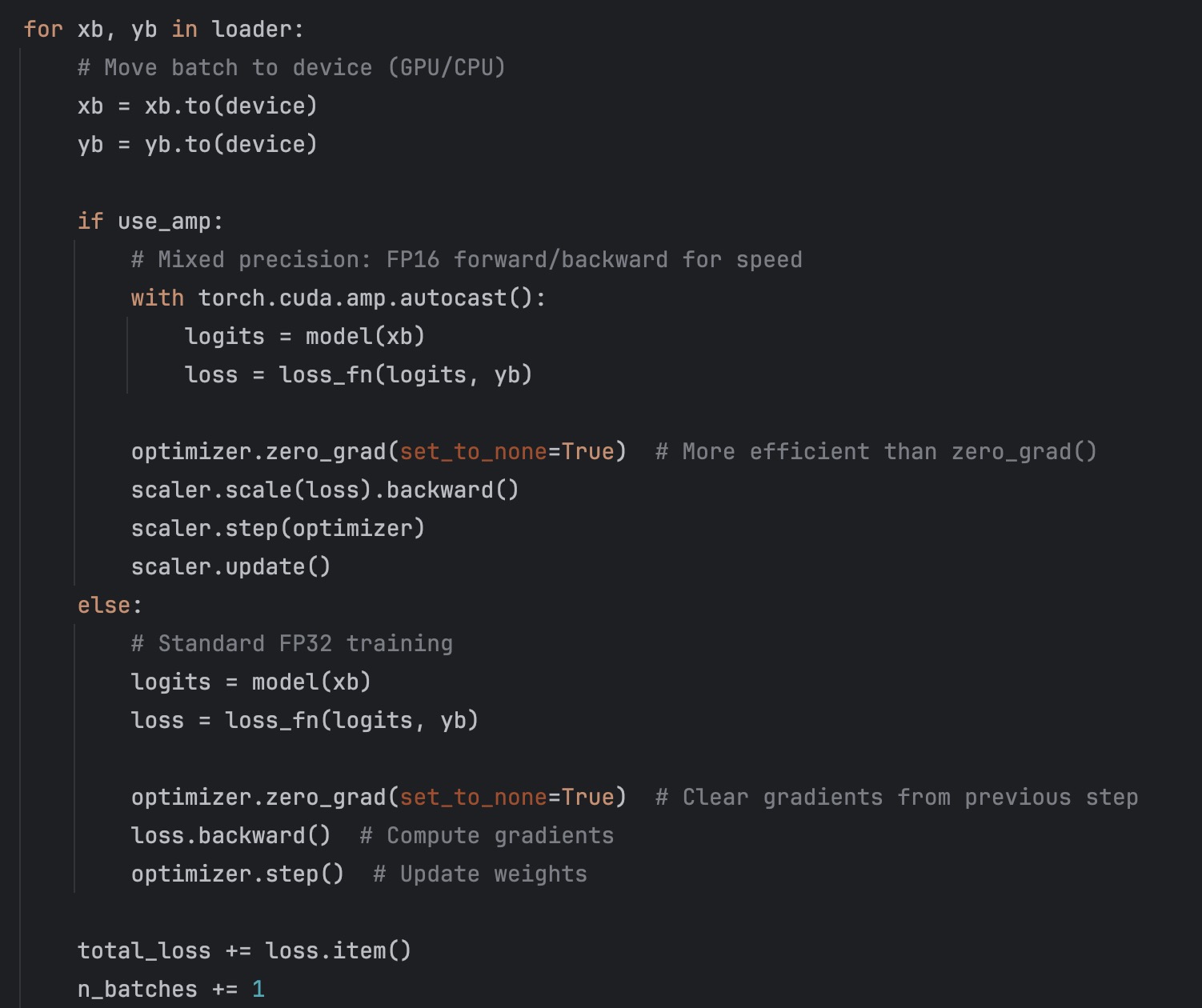

PyTorch Training Loop: A Production-Ready Template for AI Engineers

You can train almost anything in PyTorch with seven lines of code. The problem? Those seven lines are correct but incomplete for real-world engineering. You need mini-batches, validation, device ha...
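As a rough illustration of what those additions look like, here is a minimal sketch of a loop with mini-batches, a validation pass, and device handling. It is not the article's template: the `train` function, the synthetic regression data, the `nn.Linear` model, and the hyperparameters (`epochs=5`, `lr=0.05`, batch size 32) are all assumptions chosen so the example runs on its own.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset, random_split


def train(model, train_loader, val_loader, epochs=5, lr=0.05, device=None):
    """Hypothetical helper: returns a list of (train_loss, val_loss) per epoch."""
    # Device handling: use the GPU when one is available, otherwise the CPU.
    device = device or ("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)
    loss_fn = nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    history = []

    for _ in range(epochs):
        # Training pass over mini-batches.
        model.train()
        train_loss = 0.0
        for xb, yb in train_loader:
            xb, yb = xb.to(device), yb.to(device)
            optimizer.zero_grad()
            loss = loss_fn(model(xb), yb)
            loss.backward()
            optimizer.step()
            # Weight by batch size so the epoch average is per-sample.
            train_loss += loss.item() * xb.size(0)
        train_loss /= len(train_loader.dataset)

        # Validation pass: no gradient tracking, no parameter updates.
        model.eval()
        val_loss = 0.0
        with torch.no_grad():
            for xb, yb in val_loader:
                xb, yb = xb.to(device), yb.to(device)
                val_loss += loss_fn(model(xb), yb).item() * xb.size(0)
        val_loss /= len(val_loader.dataset)

        history.append((train_loss, val_loss))
    return history


# Synthetic linear-regression data (an assumption for illustration only).
torch.manual_seed(0)
X = torch.randn(256, 3)
y = X @ torch.tensor([[2.0], [-1.0], [0.5]]) + 0.1
train_set, val_set = random_split(TensorDataset(X, y), [200, 56])

train_loader = DataLoader(train_set, batch_size=32, shuffle=True)
val_loader = DataLoader(val_set, batch_size=32)

history = train(nn.Linear(3, 1), train_loader, val_loader)
for epoch, (tr, va) in enumerate(history, start=1):
    print(f"epoch {epoch}: train_loss={tr:.4f} val_loss={va:.4f}")
```

Switching between `model.train()` and `model.eval()` has no effect on a plain linear layer, but it matters once the model contains dropout or batch normalization, so the sketch keeps both calls.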