Sentinel-AI - Designing a Real-Time, Scalable AI Newsfeed

“What would a production-grade AI cluster look like if built from scratch for scale, resilience, and lightning-fast insights?”

Sentinel-AI is my answer.

Repo 👉 github.com/gsantopaolo/sentinel-AI

This project implements a real-time newsfeed platform that can aggregate, filter, store, and rank events from any source you choose: RSS, REST APIs, webhooks, internal logs, you name it. The main goal is to show how an AI-centric micro-service cluster should be architected to survive production traffic at the scale of millions of users.

Core Concepts

🔗 Dynamic Ingestion

Subscribe to any data feed (RSS, APIs, webhooks, etc.) and ingest events the moment they happen.
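Whatever the source, the Connector has to turn each item into one common event shape before publishing it. A minimal sketch for RSS, assuming the feed item has already been parsed into a plain dict. The `NewsEvent` fields and the `normalize_rss_item` helper are hypothetical and may not match the repo's actual schema. RSS dates use the RFC 822 format, which the standard library's `email.utils.parsedate_to_datetime` can parse.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


@dataclass(frozen=True)
class NewsEvent:
    """Hypothetical normalised event shape shared by downstream services."""
    id: str
    source: str
    title: str
    body: str
    published_at: datetime


def normalize_rss_item(source: str, item: dict) -> NewsEvent:
    """Map a parsed RSS item (a plain dict here) onto the common NewsEvent shape."""
    title = (item.get("title") or "").strip()
    body = (item.get("summary") or "").strip()
    link = item.get("link", "")
    # A stable ID derived from source + link lets later stages de-duplicate re-polled items.
    event_id = hashlib.sha256(f"{source}|{link}".encode()).hexdigest()[:16]

    raw_ts = item.get("published")
    if raw_ts:
        published_at = parsedate_to_datetime(raw_ts)  # RFC 822 date, as used by RSS
        if published_at.tzinfo is None:
            published_at = published_at.replace(tzinfo=timezone.utc)
        published_at = published_at.astimezone(timezone.utc)
    else:
        # Fall back to "now" when the feed omits a timestamp; keep everything in UTC.
        published_at = datetime.now(timezone.utc)
    return NewsEvent(event_id, source, title, body, published_at)
```

Keying the ID on the link rather than the title means an edited headline still maps to the same event.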

🧹 Smart Filtering

Mix rule-based heuristics with pluggable ML/LLM models to keep only the content your audience cares about.
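A minimal sketch of that mix: cheap rules run first, and only the items that survive pay for the (potentially expensive) model call. The `RelevanceModel` protocol, the `KeywordModel` toy scorer, the blocklist, and the 0.5 threshold are all hypothetical; in practice the model slot would hold an ML classifier or an LLM call.

```python
from typing import Protocol


class RelevanceModel(Protocol):
    """Any pluggable scorer (ML classifier, LLM call, ...) returning a 0..1 relevance score."""
    def score(self, text: str) -> float: ...


class KeywordModel:
    """Toy stand-in for a real model: fraction of topic keywords present in the text."""
    def __init__(self, keywords: set[str]) -> None:
        self.keywords = {k.lower() for k in keywords}

    def score(self, text: str) -> float:
        words = set(text.lower().split())
        return len(words & self.keywords) / len(self.keywords) if self.keywords else 0.0


BLOCKLIST = {"sponsored", "advertisement"}  # hypothetical rule-based heuristics


def is_relevant(text: str, model: RelevanceModel, threshold: float = 0.5) -> bool:
    words = text.lower().split()
    # 1) Rules first: drop very short items and obvious spam without touching the model.
    if len(words) < 3 or BLOCKLIST & set(words):
        return False
    # 2) Only survivors reach the pluggable model.
    return model.score(text) >= threshold
```

Because `is_relevant` depends only on the `score` method, swapping the toy model for a real classifier requires no changes to the filter logic.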

⚖️ Deterministic Ranking

Score events by “importance × recency” with a configurable algorithm, and re-rank them instantly via open APIs.
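One way to make “importance × recency” concrete is to multiply an importance score by an exponential decay with a configurable half-life. The half-life decay model, the `rank_score`/`rerank` helpers, and the 6-hour default are assumptions for illustration, not necessarily the formula Sentinel-AI uses.

```python
from datetime import datetime, timedelta, timezone


def rank_score(importance: float, published_at: datetime, now: datetime,
               half_life: timedelta = timedelta(hours=6)) -> float:
    """importance x recency, where recency halves every `half_life` (assumed decay model)."""
    age = max((now - published_at).total_seconds(), 0.0)  # future timestamps count as "just now"
    recency = 0.5 ** (age / half_life.total_seconds())
    return importance * recency


def rerank(events: list[dict], now: datetime,
           half_life: timedelta = timedelta(hours=6)) -> list[dict]:
    """Return events sorted by descending score; a longer half_life favours importance."""
    return sorted(
        events,
        key=lambda e: rank_score(e["importance"], e["published_at"], now, half_life),
        reverse=True,
    )
```

Because the score is a pure function of the event and a fixed `now`, the same inputs always produce the same order. Re-ranking with a different half-life is just a second call to `rerank`.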

Bird’s-Eye Overview

| Service | What it Does | Publishes | Persists To |
|---|---|---|---|
| Scheduler | Fires poll messages on schedule | poll.source | — |
| Connector | Fetches each source & normalises events | raw.events | — |
| Filter | Relevance filter + embeddings | filtered.events | Qdrant |
| Ranker | Scores events (importance×time) | ranked.events | Qdrant |
| Inspector | Flags anomalies / fake news | — | Qdrant |
| API | Ingest, retrieve, re-rank, CRUD sources | raw.events, new/removed.source | Postgres, Qdrant |
| Web | React dashboard ↔️ API | — | — |
| Guardian | Monitors NATS DLQ & alerts | notifications | — |
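To make the subject flow in the table concrete, here is a toy, in-process stand-in for the message bus. `InMemoryBus`, the `dlq` subject name, and the handler wiring are hypothetical; the real services talk to NATS JetStream, where a consumer's max-deliver limit plays roughly the role of `max_deliveries` here. A consumer that keeps failing has its message parked on the DLQ, where a Guardian-like handler raises an alert.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable

Handler = Callable[[dict], Awaitable[None]]


class InMemoryBus:
    """Toy in-process stand-in for NATS JetStream (hypothetical, for illustration only)."""

    def __init__(self, max_deliveries: int = 3) -> None:
        self.subs: dict[str, list[Handler]] = defaultdict(list)
        self.max_deliveries = max_deliveries

    def subscribe(self, subject: str, handler: Handler) -> None:
        self.subs[subject].append(handler)

    async def publish(self, subject: str, msg: dict) -> None:
        for handler in self.subs[subject]:
            for attempt in range(1, self.max_deliveries + 1):
                try:
                    await handler(msg)
                    break  # handler succeeded: treat as acked
                except Exception as exc:
                    if attempt < self.max_deliveries:
                        continue  # simulate a redelivery
                    if subject == "dlq":
                        raise  # never loop a failing DLQ consumer back into the DLQ
                    # Redelivery budget exhausted: park the message instead of dropping it.
                    await self.publish("dlq", {"subject": subject, "msg": msg, "error": repr(exc)})


async def demo() -> list[str]:
    bus = InMemoryBus()
    log: list[str] = []

    async def connector(msg: dict) -> None:  # poll.source -> raw.events
        for title in ("AI chip launch", "Celebrity gossip"):
            await bus.publish("raw.events", {"source": msg["source"], "title": title})

    async def relevance_filter(msg: dict) -> None:  # raw.events -> filtered.events
        if "AI" in msg["title"]:
            await bus.publish("filtered.events", msg)

    async def ranker(msg: dict) -> None:  # filtered.events -> ranked.events
        await bus.publish("ranked.events", {**msg, "score": 1.0})

    async def dashboard(msg: dict) -> None:  # stand-in for a consumer of ranked events
        log.append(f"ranked:{msg['title']}")

    async def flaky_consumer(msg: dict) -> None:  # always fails, to exercise the DLQ path
        raise RuntimeError("downstream unavailable")

    async def guardian(msg: dict) -> None:  # alerts on anything that lands on the DLQ
        log.append(f"alert:{msg['subject']}")

    bus.subscribe("poll.source", connector)
    bus.subscribe("raw.events", relevance_filter)
    bus.subscribe("filtered.events", ranker)
    bus.subscribe("ranked.events", dashboard)
    bus.subscribe("ranked.events", flaky_consumer)
    bus.subscribe("dlq", guardian)

    await bus.publish("poll.source", {"source": "demo-rss"})  # what the Scheduler would fire
    return log


print(asyncio.run(demo()))  # ['ranked:AI chip launch', 'alert:ranked.events']
```

The irrelevant item never leaves the filter stage, and the failing consumer does not block the healthy one: its message is set aside for the Guardian instead of being silently lost.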

Why It Scales to Millions

- Micro-services: Each responsibility is isolated, stateless, and horizontally scalable.

- NATS JetStream: Ultra-low-latency pub/sub with persistence, acknowledgements, and back-pressure; messages that exhaust their redelivery limit can be routed to a dead-letter queue (DLQ).

- Vector DB (Qdrant): Fast semantic search and payload updates; sharded for linear throughput growth.

- Kubernetes-ready: Health probes, autoscaling, and rolling upgrades out of the box.

- Async Python: Every network-bound task uses asyncio, squeezing maximum concurrency per pod.

- Deterministic failover: DLQ + Guardian means no silent data loss, ever.

- Docker Compose for quick local spin-ups, with a Helm chart on the roadmap for production clusters.
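The “Async Python” point boils down to a simple pattern: many network-bound fetches share one event loop, with a semaphore bounding how many are in flight at once. A minimal sketch; `fetch`, `poll_all`, and `max_in_flight` are hypothetical names, and `asyncio.sleep` stands in for a real HTTP client call.

```python
import asyncio


async def fetch(source: str) -> str:
    """Hypothetical network call; asyncio.sleep stands in for HTTP latency."""
    await asyncio.sleep(0.01)
    return f"payload from {source}"


async def poll_all(sources: list[str], max_in_flight: int = 10) -> list[str]:
    """Fetch every source concurrently, with at most `max_in_flight` requests at once."""
    sem = asyncio.Semaphore(max_in_flight)  # caps concurrency so a pod doesn't hammer remote feeds

    async def bounded(src: str) -> str:
        async with sem:
            return await fetch(src)

    # gather schedules all coroutines on one event loop and returns results in input order.
    return list(await asyncio.gather(*(bounded(s) for s in sources)))


results = asyncio.run(poll_all([f"feed-{i}" for i in range(25)], max_in_flight=5))
print(results[0])  # payload from feed-0
```

While one request waits on the network, the loop serves the others, so a single pod can keep many slow feeds busy without spawning a thread per source.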

From “Smart” to Agentic 🤖

Today, Sentinel-AI already leverages LLMs for embeddings and optional relevance checks.

Tomorrow, each micro-service can be converted into an agent with its own:

- Goal (e.g., “maintain perfect source coverage”),

- Tools (HTTP client, vector search, scoring algorithms),

- Memory (Qdrant / Postgres),

- Self-evaluation loop.
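The four ingredients above could be wired into a plan, act, remember, reflect loop roughly like this. Everything here (`Agent`, `planner`, `evaluate`, the in-memory `memory` list) is a hypothetical illustration, not the contents of src/agentic; in Sentinel-AI the memory would live in Qdrant or Postgres and the tools would be real clients.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical agent shape: goal + tools + memory + self-evaluation loop."""
    goal: str
    tools: dict[str, Callable[[], object]]
    planner: Callable[[list], str]    # picks the next tool based on what is in memory
    evaluate: Callable[[list], bool]  # self-evaluation: has the goal been met?
    memory: list = field(default_factory=list)  # stand-in for Qdrant / Postgres

    def run(self, max_steps: int = 10) -> bool:
        for _ in range(max_steps):
            tool_name = self.planner(self.memory)   # plan
            result = self.tools[tool_name]()        # act
            self.memory.append((tool_name, result))  # remember
            if self.evaluate(self.memory):          # reflect
                return True
        return False  # step budget exhausted without meeting the goal
```

A coverage agent, for instance, would register a feed health check as a tool and stop once `evaluate` confirms every source has been verified; the step budget keeps a confused planner from looping forever.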

A starter blueprint lives under src/agentic.

Swapping the current functions for agentic planners is mostly a wiring exercise; no major rewrite is required.

Try It in Two Commands

```bash
git clone https://github.com/gsantopaolo/sentinel-AI.git
cd sentinel-AI
sudo deployment/start.sh # brings up the whole stack
```

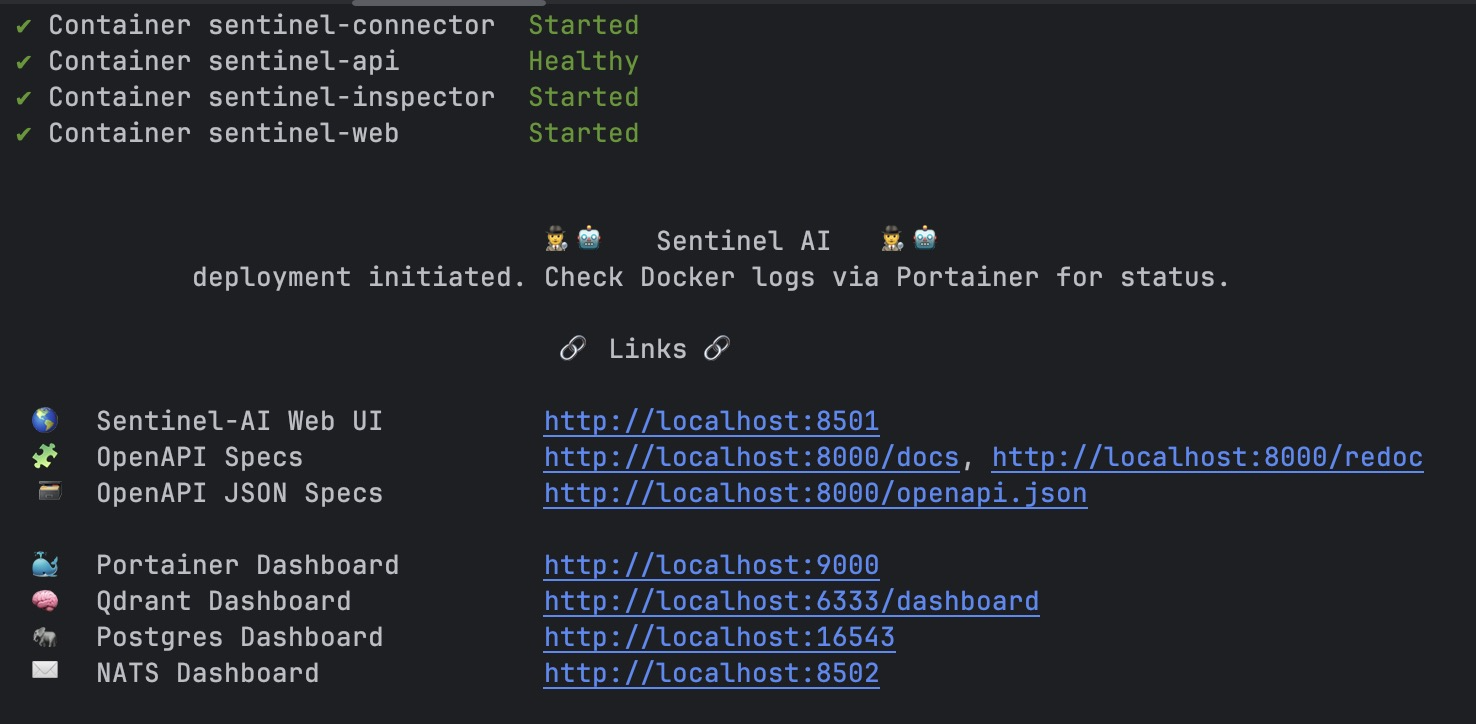

Sentinel-AI after deployment/start.sh


Open http://localhost:9000 (Portainer) to watch the containers boot.

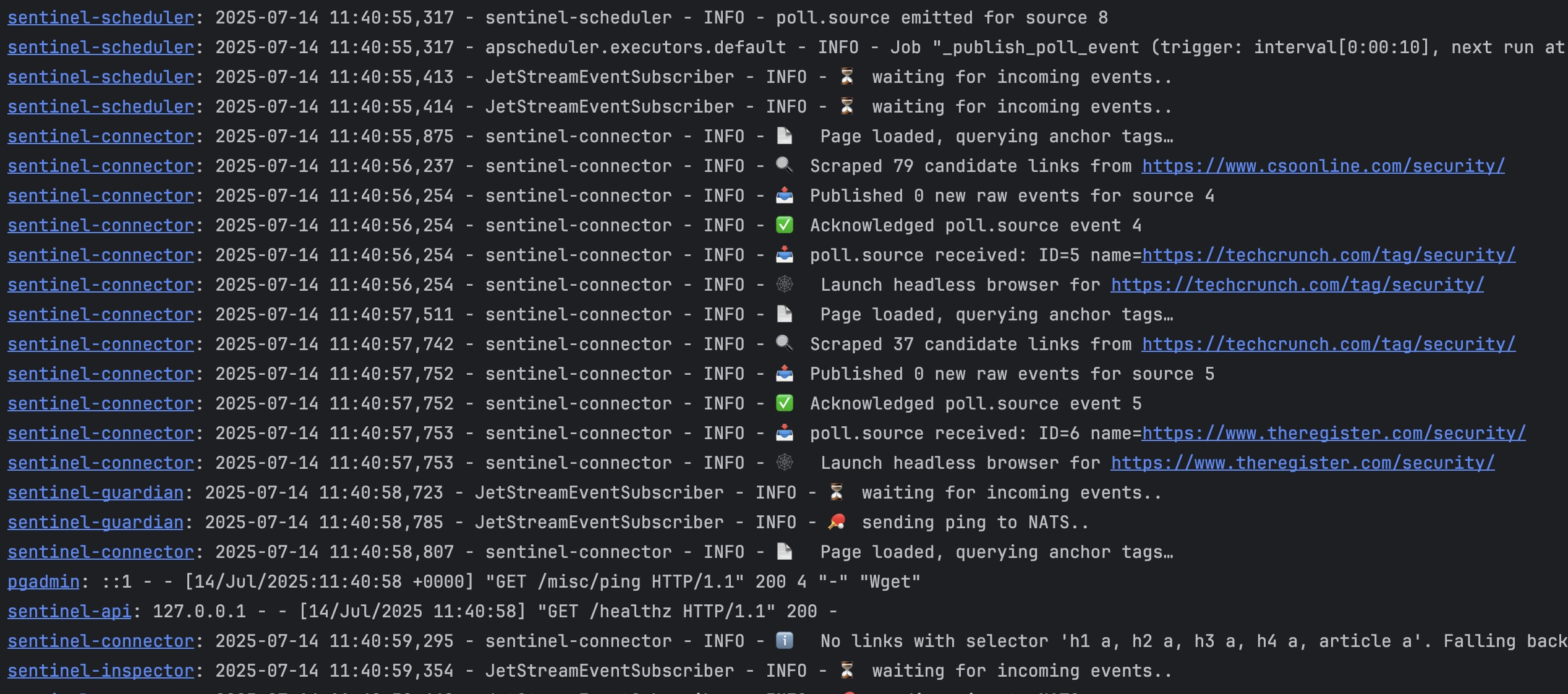

Sentinel-AI logs


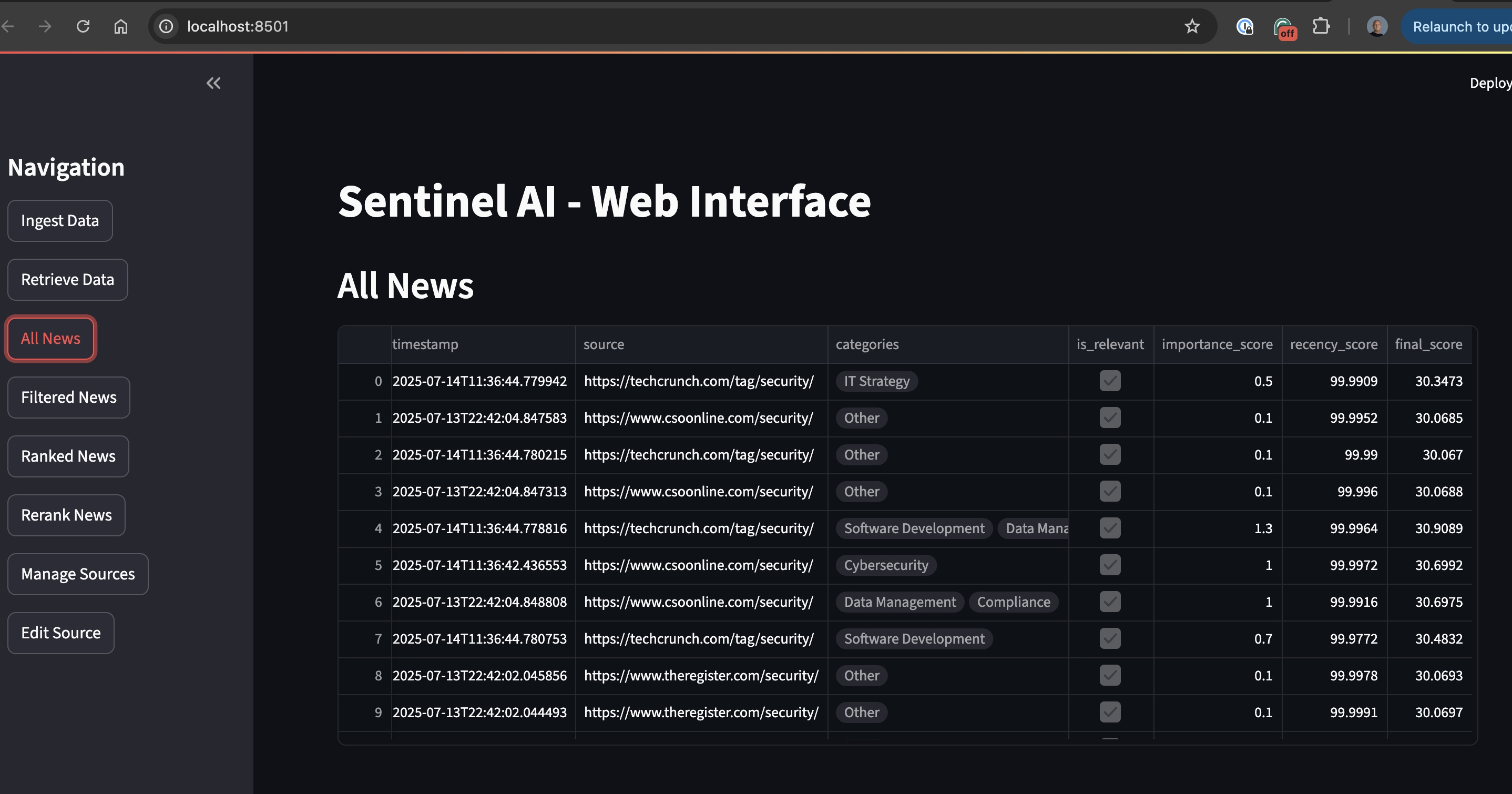

Then hit the Web UI to add your first RSS feed.  _Sentinel-AI UI

_Sentinel-AI UI

And don’t forget to watch the services’ logs in Portainer to see the magic of a distributed system: services sending messages to each other and executing their tasks.

Problems or feature ideas? 👉✉️ Open an issue or reach out on [genmind.ch](https://genmind.ch/)!

Closing Thoughts

Sentinel-AI is more than a demo; it’s a template for production-grade, AI-powered event pipelines. If you need real-time insights, bullet-proof reliability, and the freedom to plug in future agentic intelligence, feel free to fork, extend, and deploy.

Happy hacking! 💡🚀
