Software 3.0 Meets Sentinel‑AI: Building Real‑Time AI Newsfeeds with Windsurf

In this post, I’ll try to show how Windsurf’s Cascade AI transforms prompt-driven development—what Andrej Karpathy calls Software 3.0, where “prompts are programs”—into real, end‑to‑end scaffolding, refactoring, and maintenance of Sentinel‑AI.

You’ll see how to set up inline AI rules for Python, scaffold FastAPI services, perform multi‑file edits (e.g., adding logging), generate complex Qdrant initialization code, apply surgical in‑line tweaks (with extra care around shared lib_py utilities), automate CLI deployments, and run one‑click refactors and docs via codelenses, all within Windsurf’s agentic IDE.

Introduction

Windsurf’s Cascade AI embodies Software 3.0, a paradigm popularized by Andrej Karpathy in which natural‑language prompts become the primary interface for writing and refactoring code.

Karpathy argues we’ve moved from Software 1.0 (hand‑written code) through Software 2.0 (machine‑learned parameters) to Software 3.0, in which developers “just ask” and LLMs rewrite legacy codebases over time. Windsurf’s Cascade lets you vibe code—iteratively prompt, verify, and refine—so you focus on guiding the AI rather than typing every line yourself.

Sentinel‑AI is a production‑grade Python microservices system for real‑time news ingestion, filtering, ranking, and anomaly detection, built on NATS JetStream, Qdrant, and FastAPI. In the sections below, I’ll map Windsurf’s seven game‑changing features to hands‑on actions in Sentinel‑AI’s codebase.

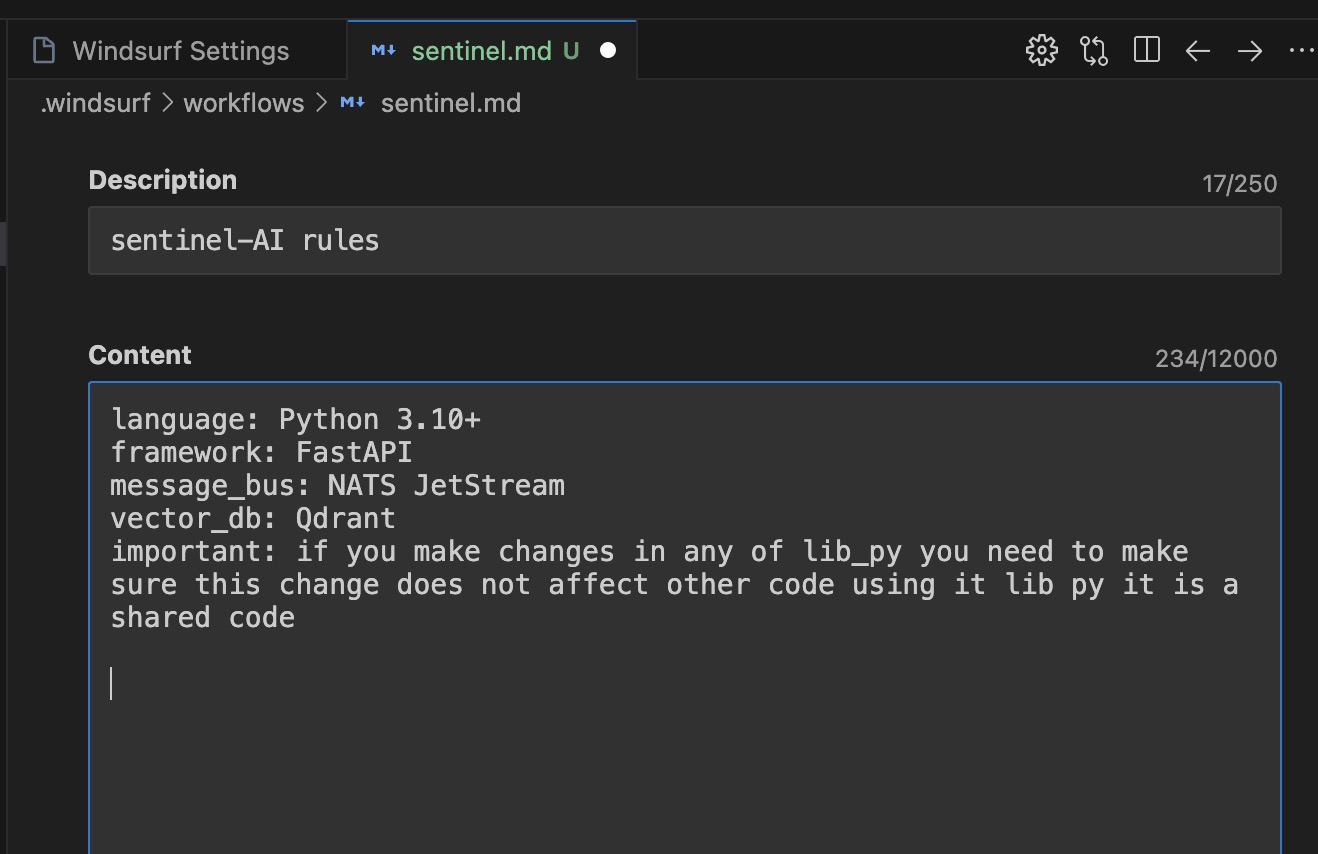

Configuring Windsurf with Software 3.0 Principles

First, codify your stack once via Cascade Memories & Rules so Windsurf “remembers” you’re working with Python 3.12, FastAPI, NATS, and Qdrant. In Cascade’s Settings → Rules panel, create a workspace rule:

language: Python 3.10+

framework: FastAPI

message_bus: NATS JetStream

vector_db: Qdrant

important: lib_py is shared code; if you change anything in lib_py, make sure the change does not affect other code that uses it

This upfront rule‑definition aligns with Karpathy’s “prompts as programs” by embedding your tech stack into the AI’s context, saving tokens and latency on every prompt.

Feature 1: Cascade Memories → Environment Setup

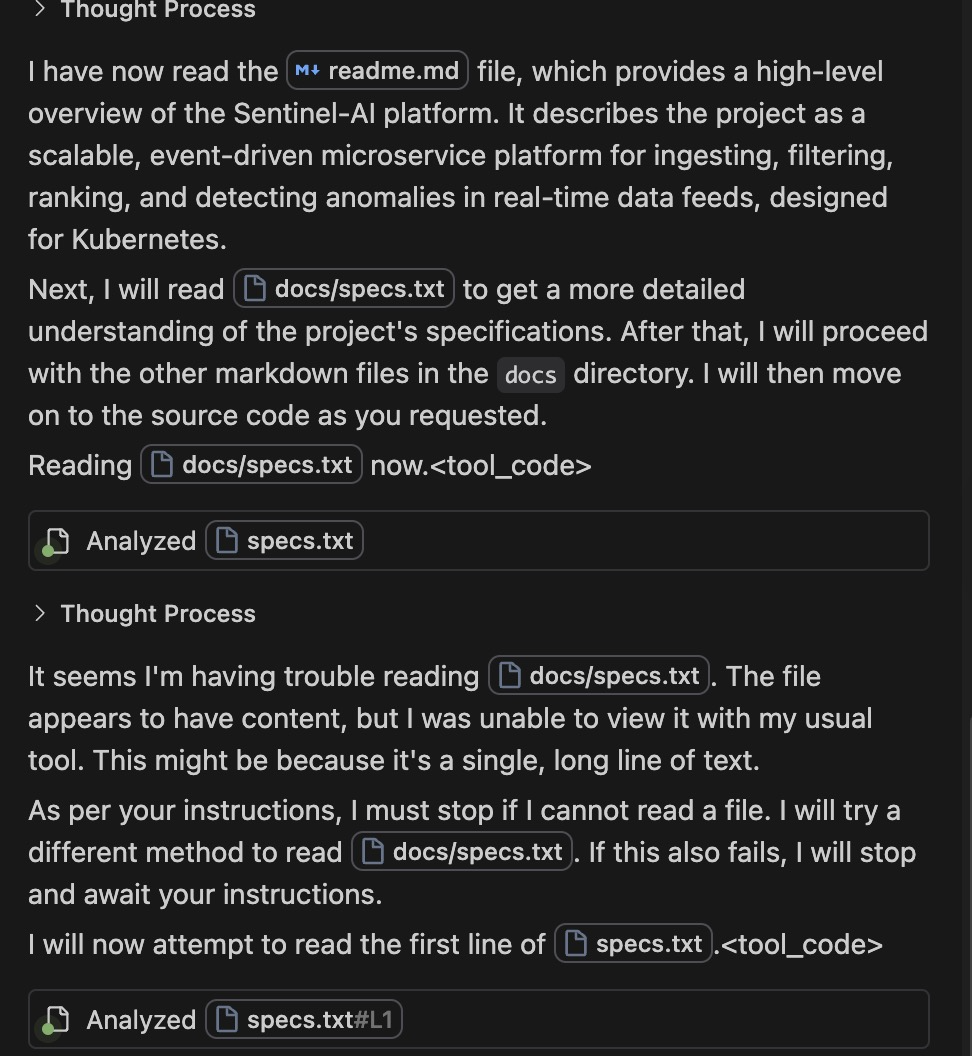

First of all, when I use Windsurf for the first time on a project that already contains code and docs, I start with a prompt that drives Windsurf to analyze and understand the whole project, something like:

Read all the docs inside the folder and its subfolders (search recursively).

Read excluded/spec and all the .md files in docs, and read readme.md in the root folder that you have access to.

If you can't read even one of the docs I asked you to read, stop and tell me; do not go any further.

At this point you should have a pretty good understanding of what Sentinel AI is.

Important: there can be some mismatch between the documentation you read and the code. The code wins; the documentation might be a bit outdated.

OK, now read all the code in src, all the services, and also lib_py, which contains shared classes and functions used in all the services.

When you are done, I want evidence that you understood the project.

Make a summary of the documentation, and a quick summary of every service in src as well as of lib_py.

Windsurf analyzing the whole repo

After that, we can start working.

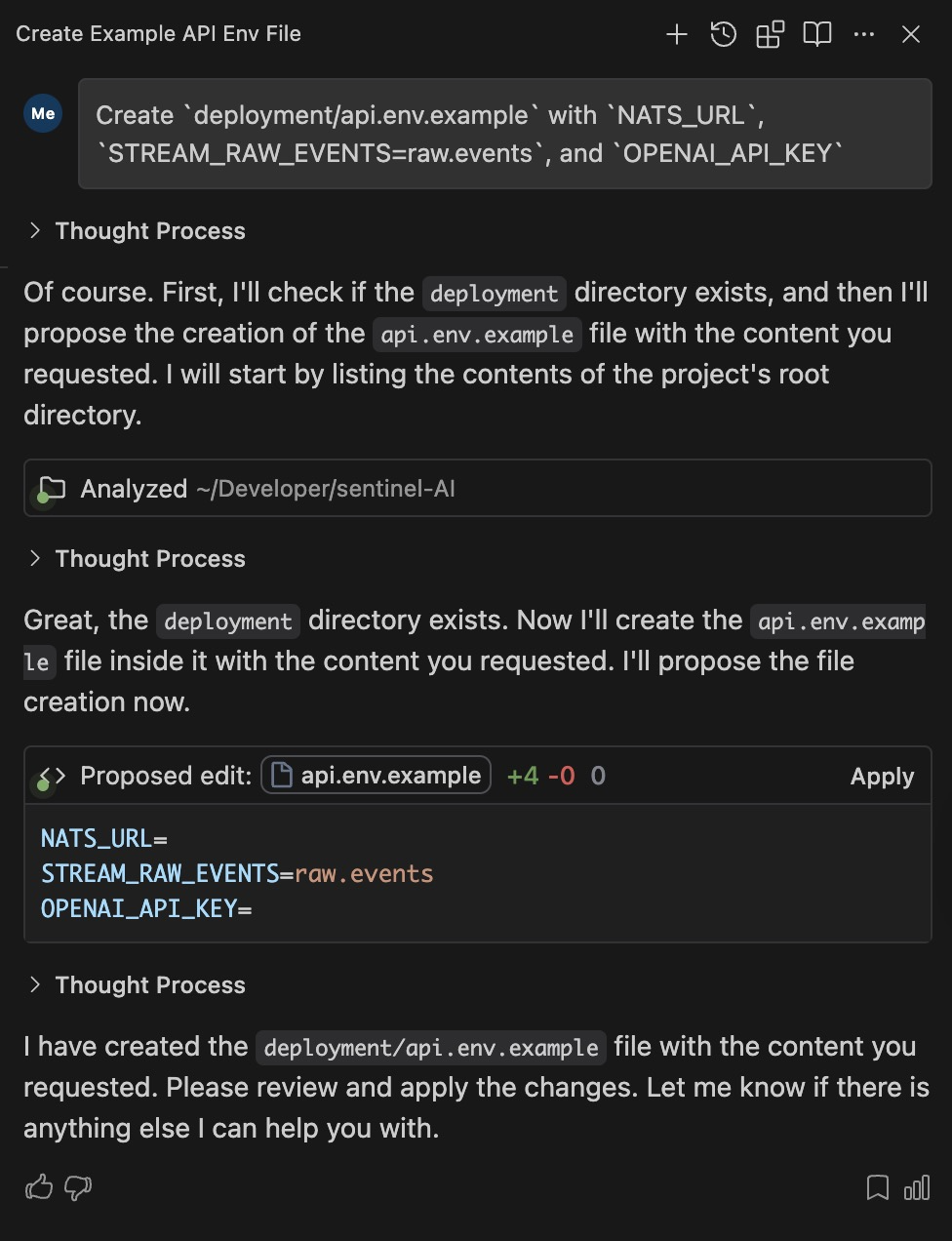

Use Write mode to generate consistent .env.example files across services (Windsurf can’t read or write files and folders listed in the .gitignore file):

- Prompt: “Create `deployment/api.env.example` with `NATS_URL`, `STREAM_RAW_EVENTS=raw.events`, and `OPENAI_API_KEY`.”
- Outcome: Cascade writes a complete `api.env.example` matching Sentinel‑AI’s `deployment/.env.example`, including `STREAM_FILTERED_EVENTS` and other required keys.

And there you go: click “Apply” and then “Accept”, and your file is created automatically!
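One way to double-check a generated file is a small script that compares its keys against the shared template. This is only a minimal sketch: the `parse_env_keys` and `missing_keys` helpers are hypothetical names, and the sample contents (including the `filtered.events` value and the NATS URL) are illustrative assumptions, not Sentinel‑AI’s actual files.

```python
def parse_env_keys(text: str) -> set[str]:
    """Return the variable names defined in .env-style text."""
    keys = set()
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        name, sep, _value = line.partition("=")
        if sep:
            keys.add(name.strip())
    return keys


def missing_keys(template_text: str, service_text: str) -> set[str]:
    """Keys present in the shared template but absent from the service file."""
    return parse_env_keys(template_text) - parse_env_keys(service_text)


# Illustrative contents only (assumed values); real files would be loaded
# with pathlib.Path(...).read_text().
template = """
# shared template
NATS_URL=nats://localhost:4222
STREAM_RAW_EVENTS=raw.events
STREAM_FILTERED_EVENTS=filtered.events
OPENAI_API_KEY=
"""
service = """
NATS_URL=nats://localhost:4222
STREAM_RAW_EVENTS=raw.events
OPENAI_API_KEY=
"""

print(sorted(missing_keys(template, service)))  # ['STREAM_FILTERED_EVENTS']
```

Running a check like this after each generated file makes it easy to see whether Cascade really picked up every key from the template.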

Feature 2: Write vs. Chat – Scaffolding & Exploration

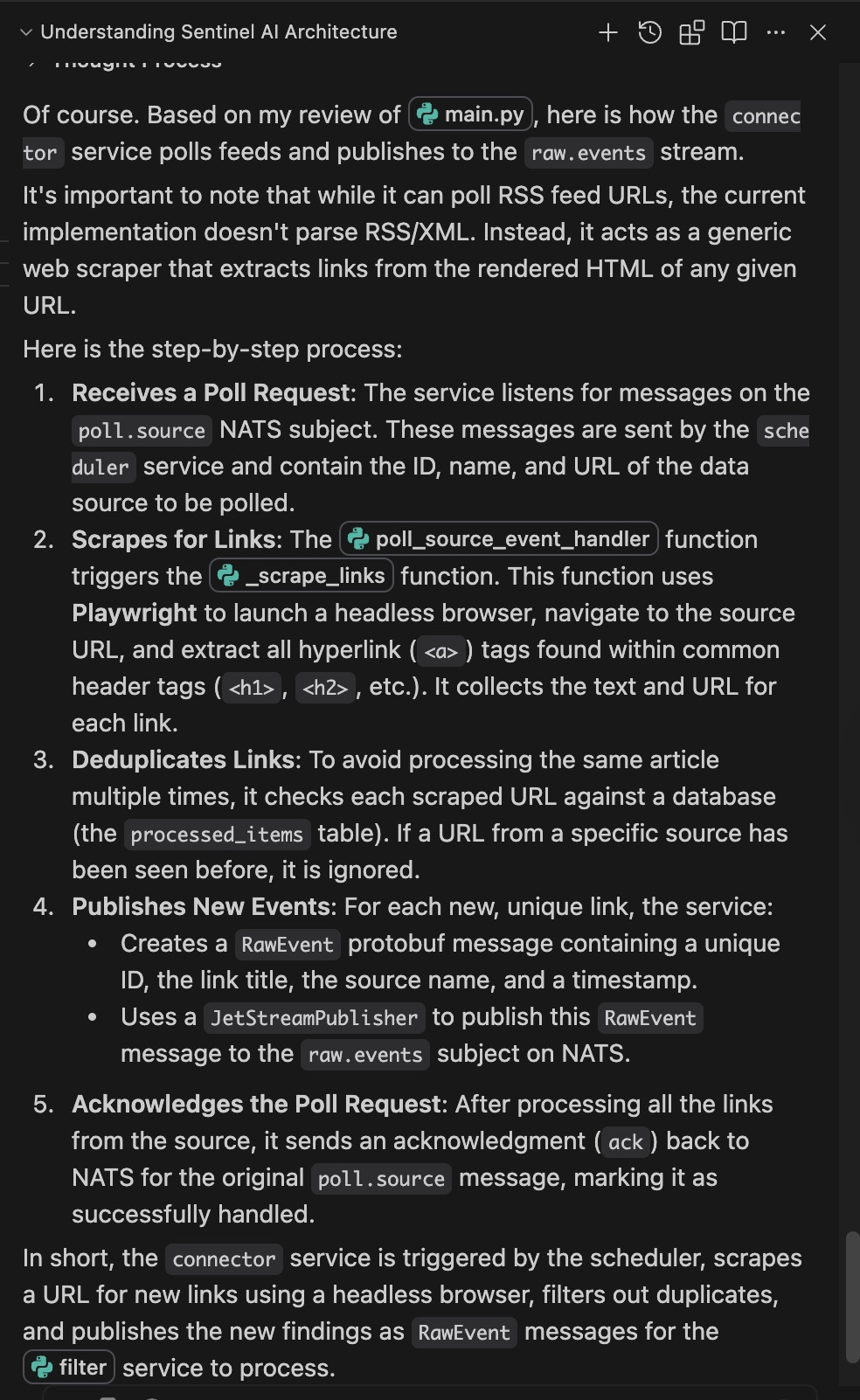

Leverage Write and Chat modes interchangeably for code generation and understanding:

- Write mode: “Scaffold `src/api/main.py` as a FastAPI app with `/events/raw`, `/events/filtered`, and `/events/ranked` endpoints.” Cascade generates imports, Pydantic models, and NATS subscriber logic in under a minute.
- Chat mode: “Explain how `connector/main.py` polls RSS feeds and publishes to `raw.events`.” Windsurf analyzes the file and returns a concise architecture overview.

This generate‑verify loop is central to Karpathy’s vibe coding method—prompt, inspect, and re‑prompt until satisfied.

Screenshot suggestion: Split‑pane: left shows Write prompt, right shows generated main.py.

Windsurf explaining connector

Feature 3: Multi‑File Editing – Bulk Upgrades

For cross‑cutting concerns like logging, Cascade can update multiple files in one session:

- Prompt: “Add `logger = get_logger(__name__)` import and initialization at the top of every `src/*/main.py`.”
- Workflow: Cascade stages changes across scheduler, connector, filter, ranker, inspector, guardian, and web services. You review diffs service by service, approving each before execution.

This showcases AI’s ability to “eat” entire codebases with a single prompt, as Karpathy predicts for Software 3.0 migrations.
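The prompt relies on a `get_logger` helper that this post doesn’t show. As a rough sketch of what such a shared helper could look like, assuming it wraps Python’s standard `logging` module (the log format, the default level, and the stdout handler are my assumptions, not Sentinel‑AI’s actual code):

```python
import logging
import sys


def get_logger(name: str, level: int = logging.INFO) -> logging.Logger:
    """Return a logger that writes to stdout, configuring it only once.

    Hypothetical stand-in for the shared helper in lib_py; the real one may differ.
    """
    logger = logging.getLogger(name)
    # Guard against duplicate handlers when the same name is requested twice.
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
        )
        logger.addHandler(handler)
        logger.setLevel(level)
        # Avoid double output if the root logger is also configured.
        logger.propagate = False
    return logger


# What the bulk edit would place at the top of each service's main.py:
logger = get_logger(__name__)
logger.info("service starting")
```

Because the helper is idempotent, it doesn’t matter if a service and one of its modules both ask for the same logger name.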

Feature 4: Supercomplete – Complex Logic Generation

Supercomplete uses context from all open files to generate multi‑line code:

- Task: In `src/filter/filter.py`, after the `async def initialize_qdrant()` stub, type “continue.”
- Result: Cascade writes Qdrant client setup, collection creation, batch insertion logic, and ties into `lib_py/utils.py` for shared helpers (DataCamp).

Library Caveat: Because `lib_py` is a shared code bundle used across services, always add to your prompt: “Ensure changes in `lib_py/utils.py` do not break other services using it.” This prevents unintended global side‑effects.

Feature 5: In‑Line Commands – Surgical Tweaks

Apply precise, localized edits without disturbing surrounding code:

- Example: Select `compute_decay()` in `src/ranker/decay.py` and run “Change half‑life parameter from 12h to 6h.”
- Outcome: Cascade updates only that function signature and adjusts related imports/tests automatically.

This surgical approach exemplifies how prompts replace manual refactoring in Software 3.0 workflows.
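To make the half‑life change concrete, here is a minimal sketch of what a function like `compute_decay()` might look like. The post doesn’t show the real implementation, so the signature, the exponential formula, and the clamping of negative ages are all assumptions on my part:

```python
def compute_decay(score: float, age_hours: float, half_life_hours: float = 6.0) -> float:
    """Exponentially decay a score: it halves every `half_life_hours`."""
    if half_life_hours <= 0:
        raise ValueError("half_life_hours must be positive")
    # Clamp negative ages (e.g. clock skew) so fresh items are never boosted.
    age_hours = max(age_hours, 0.0)
    return score * 0.5 ** (age_hours / half_life_hours)


# With a 6h half-life, a 12h-old item keeps a quarter of its score;
# under the previous 12h half-life it would have kept half.
print(compute_decay(1.0, 12.0))                        # 0.25
print(compute_decay(1.0, 12.0, half_life_hours=12.0))  # 0.5
```

Keeping the half‑life as a default parameter means the in‑line edit touches a single value, which is exactly the kind of change you want to be able to review at a glance.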

Feature 6: Command in Terminal – Safe Deployments

Have Windsurf propose and verify CLI commands in natural language:

- Prompt: “Generate a Helm command to deploy `guardian` to namespace `sentinel-ai`.”
- Suggestion:

    helm upgrade --install guardian ./helm/guardian \
      --namespace sentinel-ai \
      --set image.tag=latest

- Verification: You inspect and approve before running.

Automating deployment commands via prompts mirrors the shift from traditional scripts to conversational ops in Software 3.0, yet with human‑in‑the‑loop safeguards.
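The same human‑in‑the‑loop idea can be sketched outside the IDE too. The snippet below is only an illustration, with hypothetical helper names (`build_helm_command`, `confirm_and_run`): it builds the Helm invocation as an argument list, prints it for review, and executes it only after an explicit “yes”.

```python
import shlex
import subprocess


def build_helm_command(release: str, chart: str, namespace: str, image_tag: str) -> list[str]:
    """Assemble the helm invocation as an argument list (no shell involved)."""
    return [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--set", f"image.tag={image_tag}",
    ]


def confirm_and_run(cmd: list[str], answer: str) -> bool:
    """Run the command only when the reviewer explicitly answered 'yes'."""
    if answer.strip().lower() != "yes":
        return False
    subprocess.run(cmd, check=True)
    return True


cmd = build_helm_command("guardian", "./helm/guardian", "sentinel-ai", "latest")
# Show the exact command for review before anything is executed.
print(shlex.join(cmd))
```

In an interactive script you would pass `input("Run this? ")` as the `answer`; anything other than “yes” leaves the cluster untouched.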

Feature 7: Codelenses – Docs & Refactoring

One‑click codelenses let you generate docs and refactor code seamlessly:

- Add Docstring: Hover above `async def inspect_event()`, click “Add Docstring,” and Cascade generates a full docstring consistent with your style guide.
- Refactor: Use “Refactor” on duplicate validation in `inspector/rules.py` to extract a helper into `lib_py/config.py`.

These instant transformations illustrate Karpathy’s promise that English prompts can orchestrate sophisticated code rewrites.

Bonus: Auto‑execute Settings – Streamlined Workflow

Configure Windsurf’s allow/deny lists so routine commands run without prompts:

- Allow list: `docker-compose up`, `pytest --maxfail=1`
- Deny list: `rm -rf /`, `kubectl delete namespace sentinel-ai`
- Model Judgment: For unrecognized commands, Windsurf prompts you, balancing speed with safety, per Karpathy’s “keep AI on a leash” advice.
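The three‑way policy above can be pictured as a small classifier. To be clear, this is not Windsurf’s actual matching logic, just a sketch of the idea under my own assumptions: deny entries are matched anywhere in the command, allow entries only as a prefix, and everything else goes back to the human.

```python
from enum import Enum


class Decision(Enum):
    AUTO_RUN = "auto-run"
    BLOCK = "block"
    ASK = "ask"


# Entries taken from the lists above; the matching rules are assumptions.
ALLOW_LIST = ("docker-compose up", "pytest --maxfail=1")
DENY_LIST = ("rm -rf /", "kubectl delete namespace sentinel-ai")


def classify(command: str) -> Decision:
    """Deny wins over allow; anything unrecognized goes to the human."""
    # Collapse repeated whitespace so "rm  -rf /" cannot slip through.
    normalized = " ".join(command.split())
    # Deny entries match anywhere, so chaining onto an allowed command is caught.
    if any(entry in normalized for entry in DENY_LIST):
        return Decision.BLOCK
    if any(normalized.startswith(entry) for entry in ALLOW_LIST):
        return Decision.AUTO_RUN
    return Decision.ASK


for cmd in (
    "pytest --maxfail=1 tests/",
    "rm  -rf /",
    "pytest --maxfail=1 && rm -rf /",
    "kubectl get pods",
):
    print(f"{cmd!r}: {classify(cmd).value}")
```

Checking the deny list first is the important design choice here: an allowed prefix should never be able to smuggle in a destructive command.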

Screenshot suggestion: Windsurf terminal settings panel showing allow/deny lists.

Conclusion & Next Steps

By applying Karpathy’s Software 3.0 principles with Windsurf’s Cascade AI, you can scaffold, refactor, and operate a complex Python microservices pipeline like Sentinel‑AI in minutes rather than days. Try these patterns today:

- Fork the Sentinel‑AI repo.

- Share your vibe‑coding prompts and recipes.

- Explore autonomous agents in `src/agentic` and push the boundaries of prompt‑centric programming.

Happy coding and welcome to Software 3.0!
