AI Image Detection: More Robust Than You Think

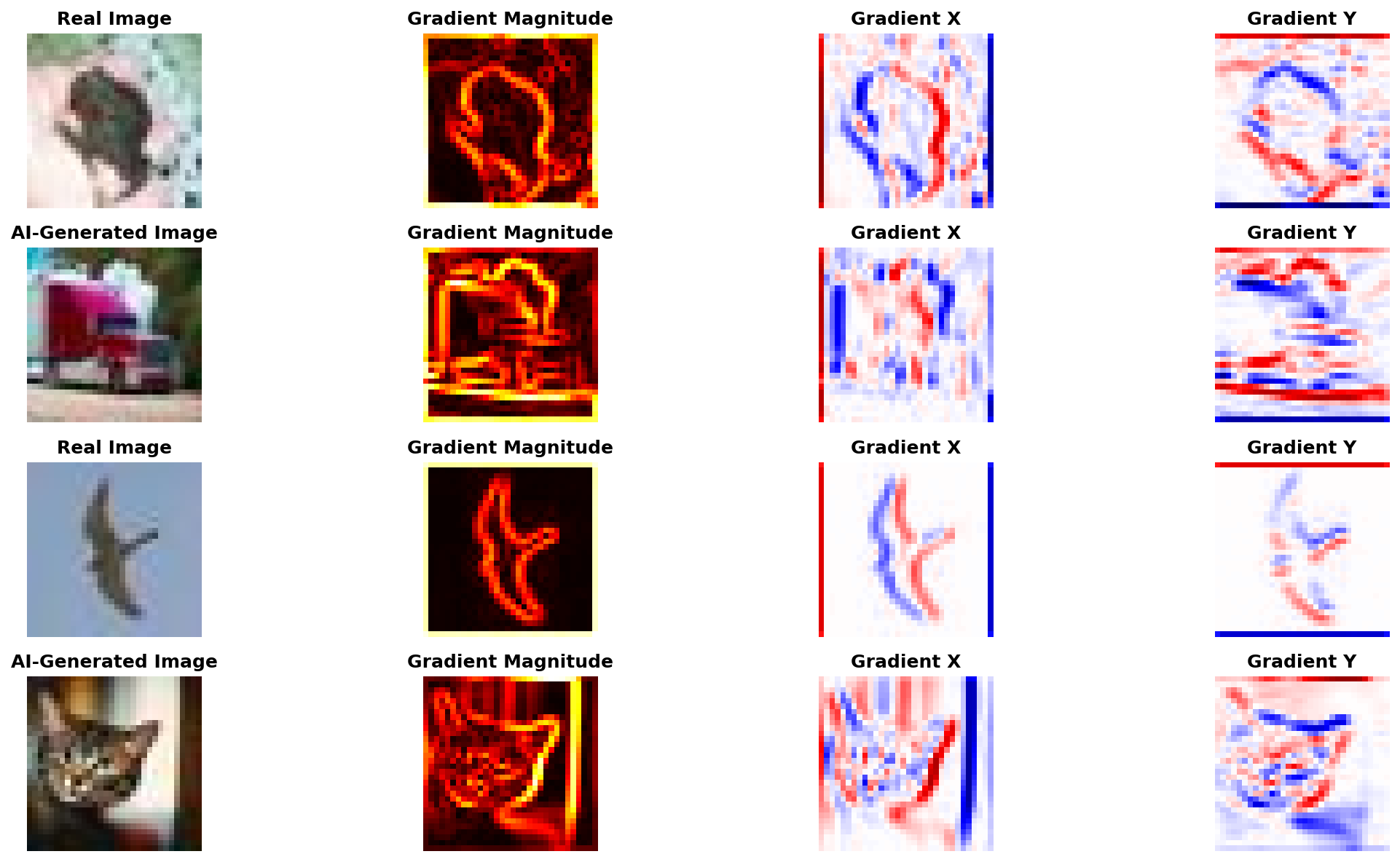

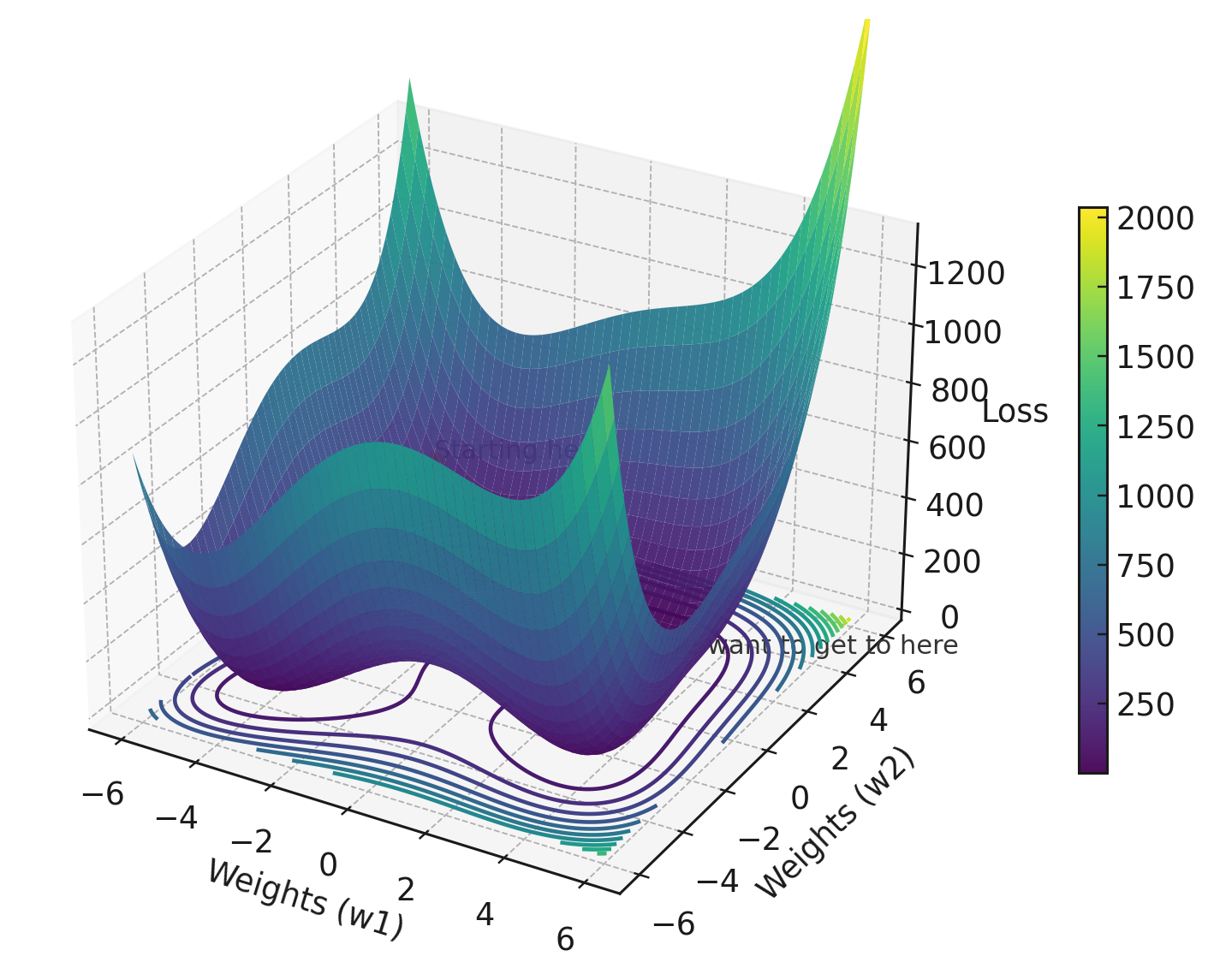

I keep seeing posts claiming: “AI image detection completely fails in the real world. JPEG compression and resizing destroy all detection signals. These methods only work in labs with pristine imag...

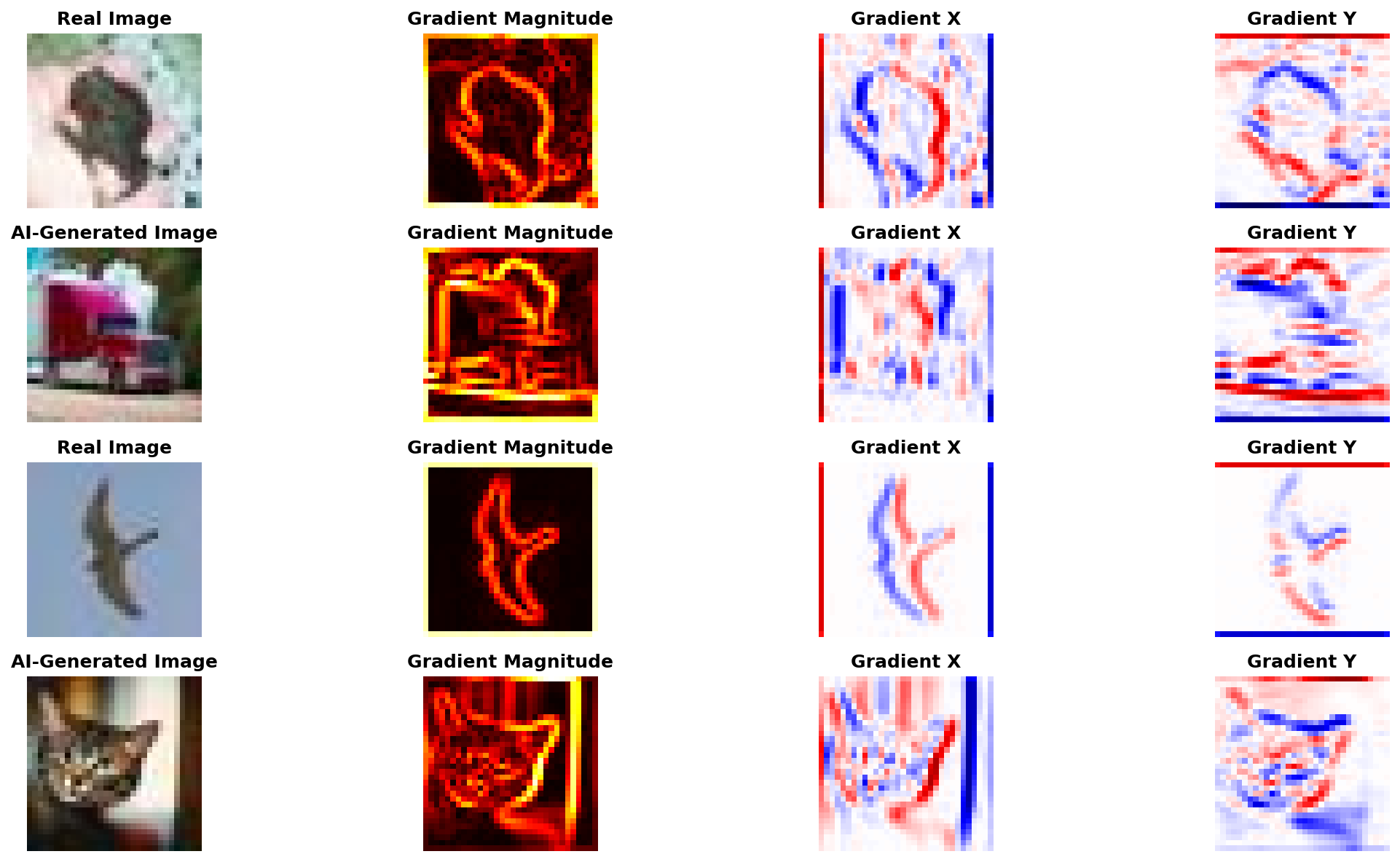

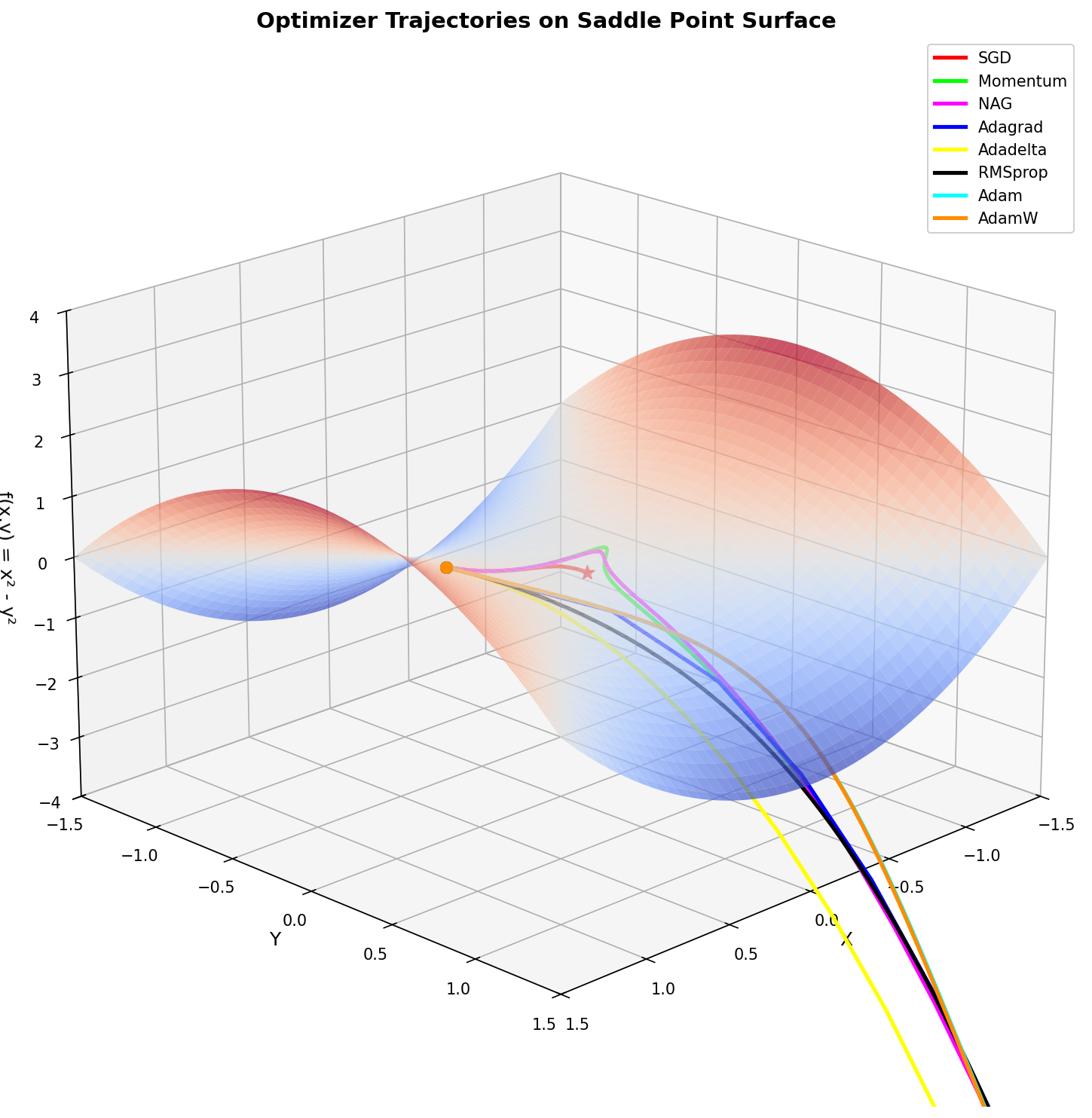

If the loss function tells your model what to minimize, the optimizer tells it how to get there. Pick the wrong optimizer and your model might converge slowly, get stuck in bad minima, or blow up ...

Choosing the right loss function is one of those decisions that can make or break your model. Get it wrong, and your network might struggle to learn anything useful. Get it right, and training beco...

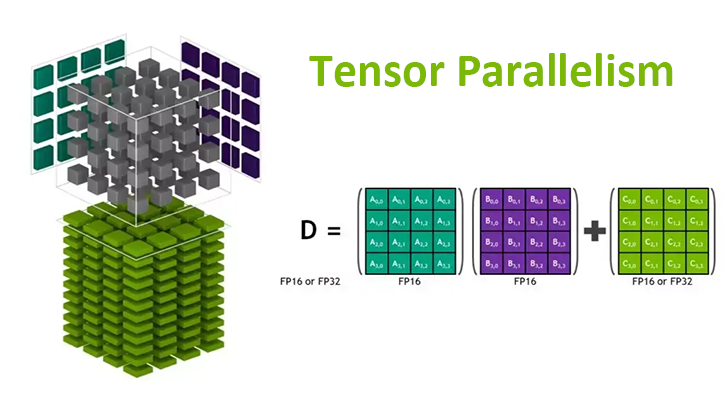

Running a 70B-parameter model on a single GPU? Not happening. Even the beefiest H100 with 80GB of VRAM can’t hold Llama-2-70B in full precision. This is where Tensor Parallelism (TP) comes in: it...

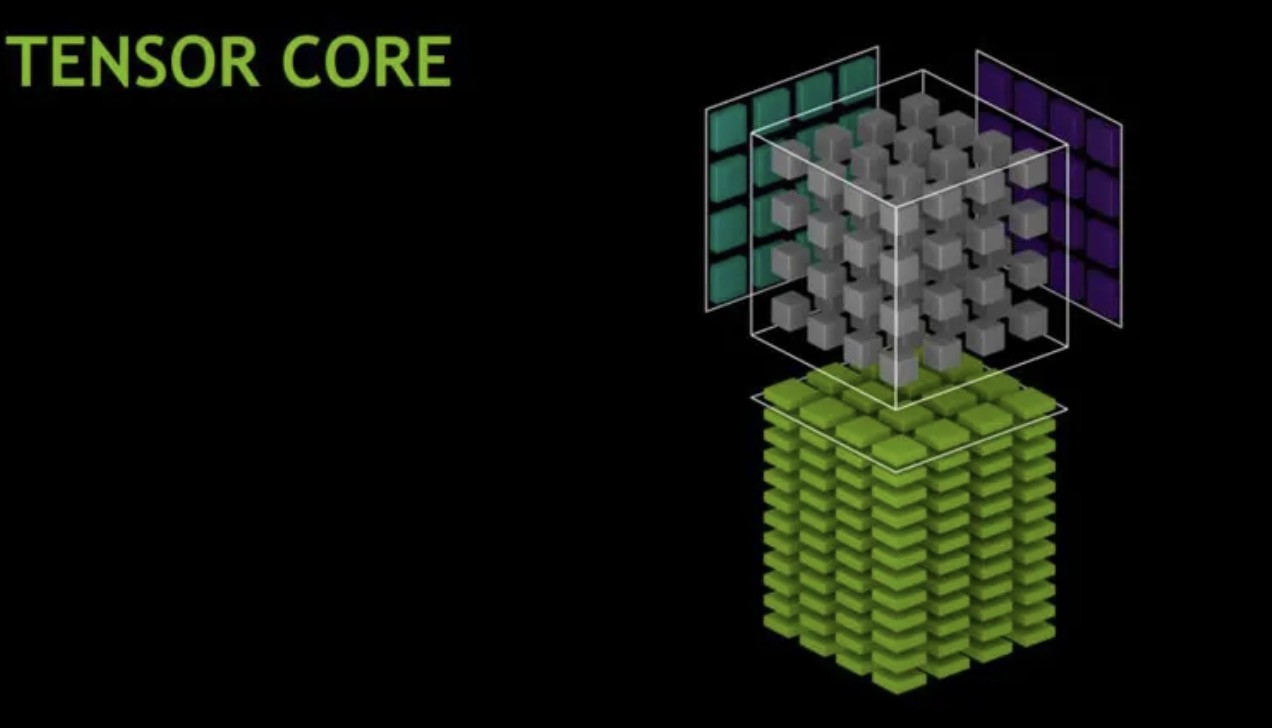

Understanding why modern AI is so fast requires understanding the hardware that powers it. This is a companion post to “What Is a Tensor? A Practical Guide for AI Engineers,” focusing specifically...

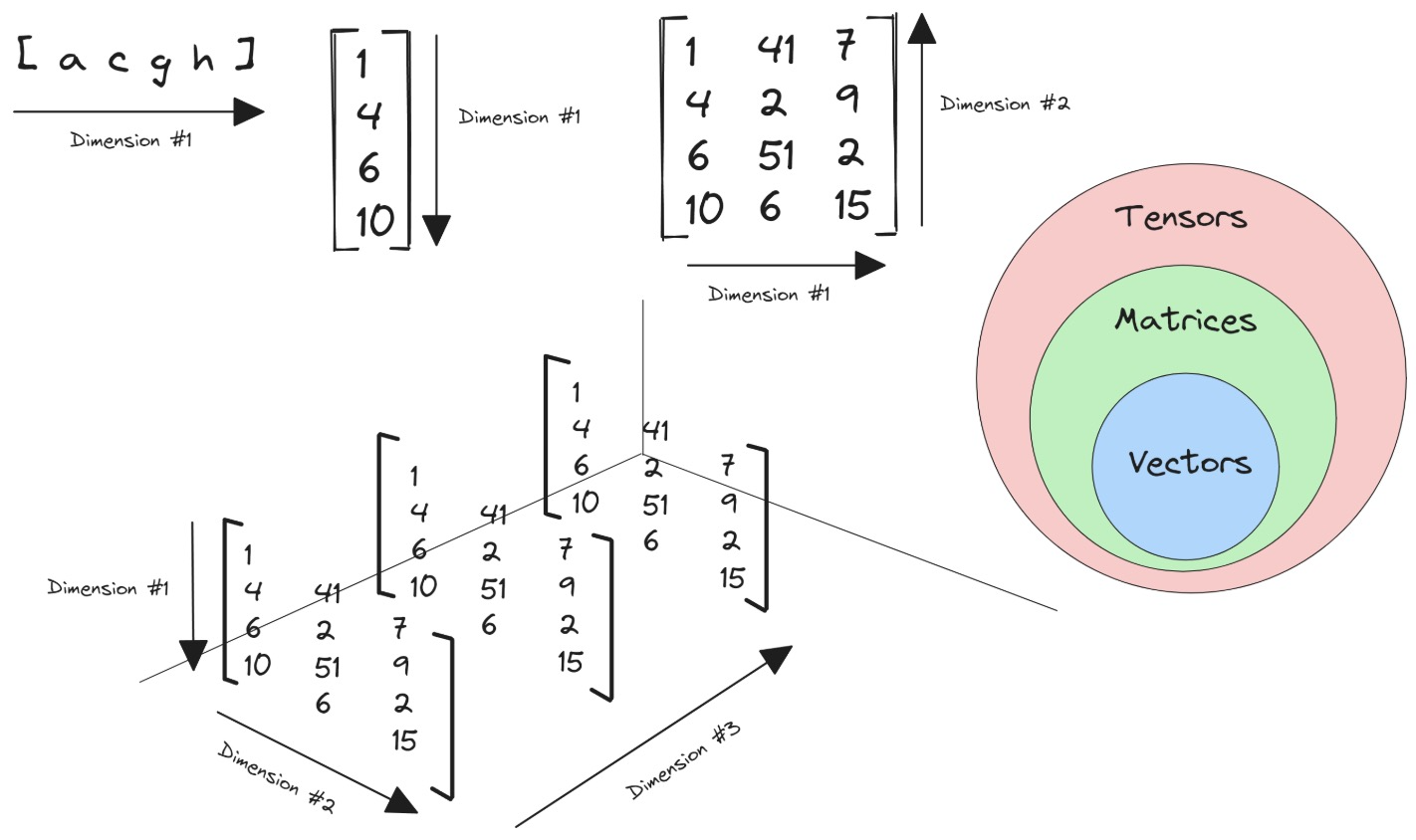

When dealing with deep learning, we quickly encounter the word “tensor.” But what exactly is a tensor? Is it just a fancy name for an array? A mathematical object from physics? Both? This guide take...

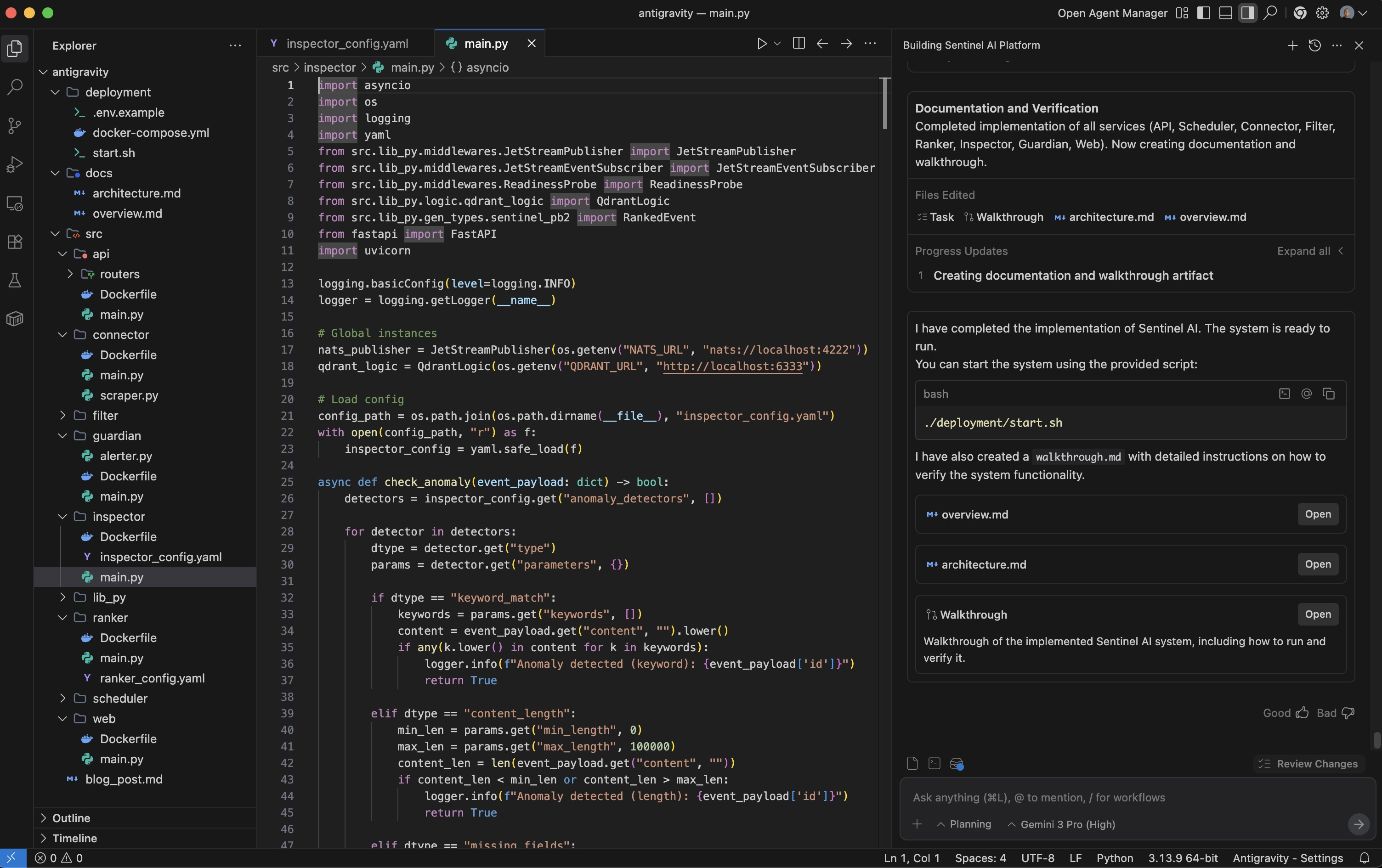

Everyone is talking about Google Antigravity. If you’ve seen the recent YouTube reviews, you know the hype is real. They’re showing off the “vibe coding” capabilities: building React apps with a si...

As the saying goes, “a picture is worth a thousand words.” But what if a picture could be worth exactly 1,000 text tokens with 97% accuracy? DeepSeek-AI’s latest release, DeepSeek-OCR, ...

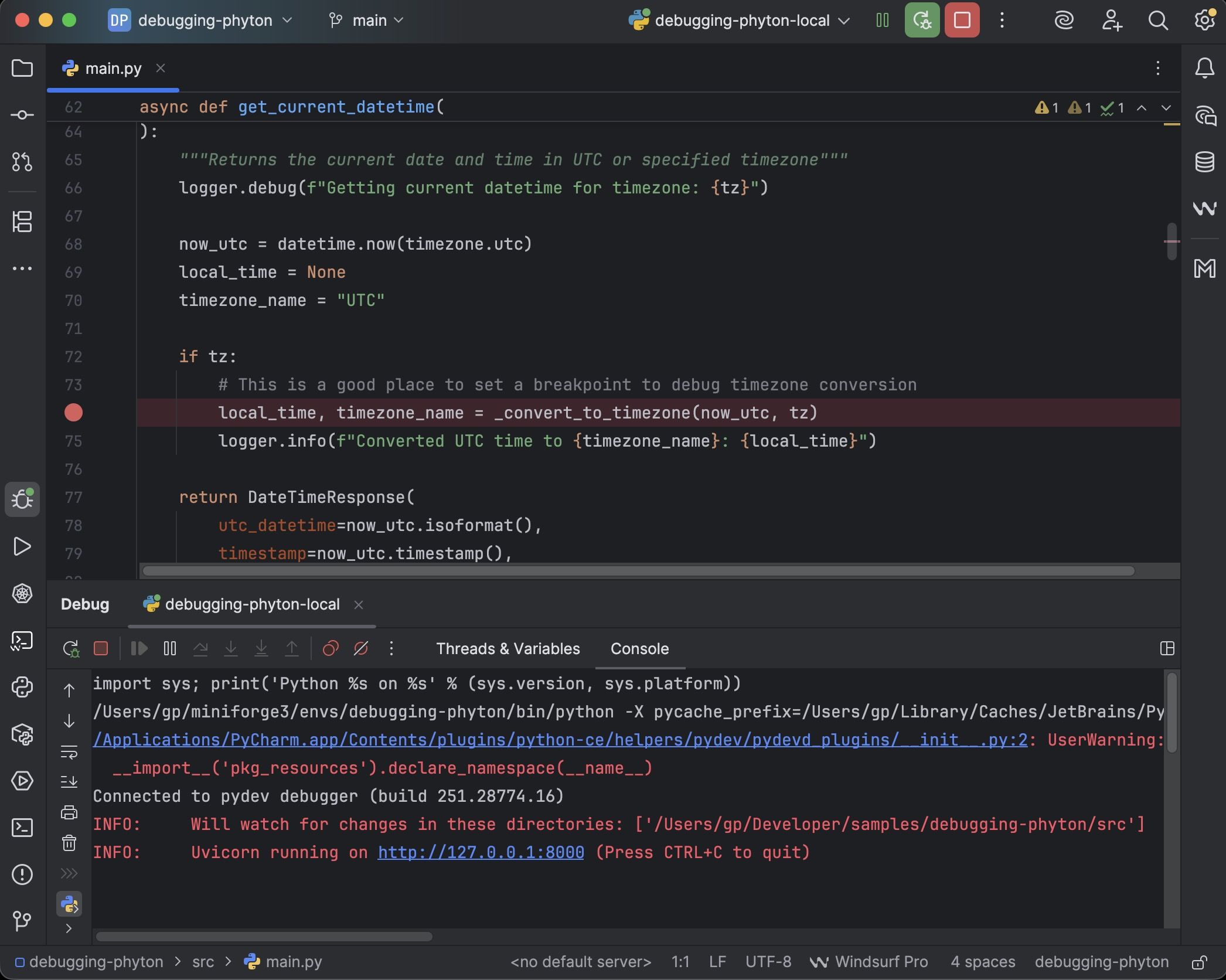

If you’re working with PyCharm, Docker, and Python daily, your debugger is either your best friend or that powerful tool you know exists but haven’t fully mastered. This guide will change that. Th...

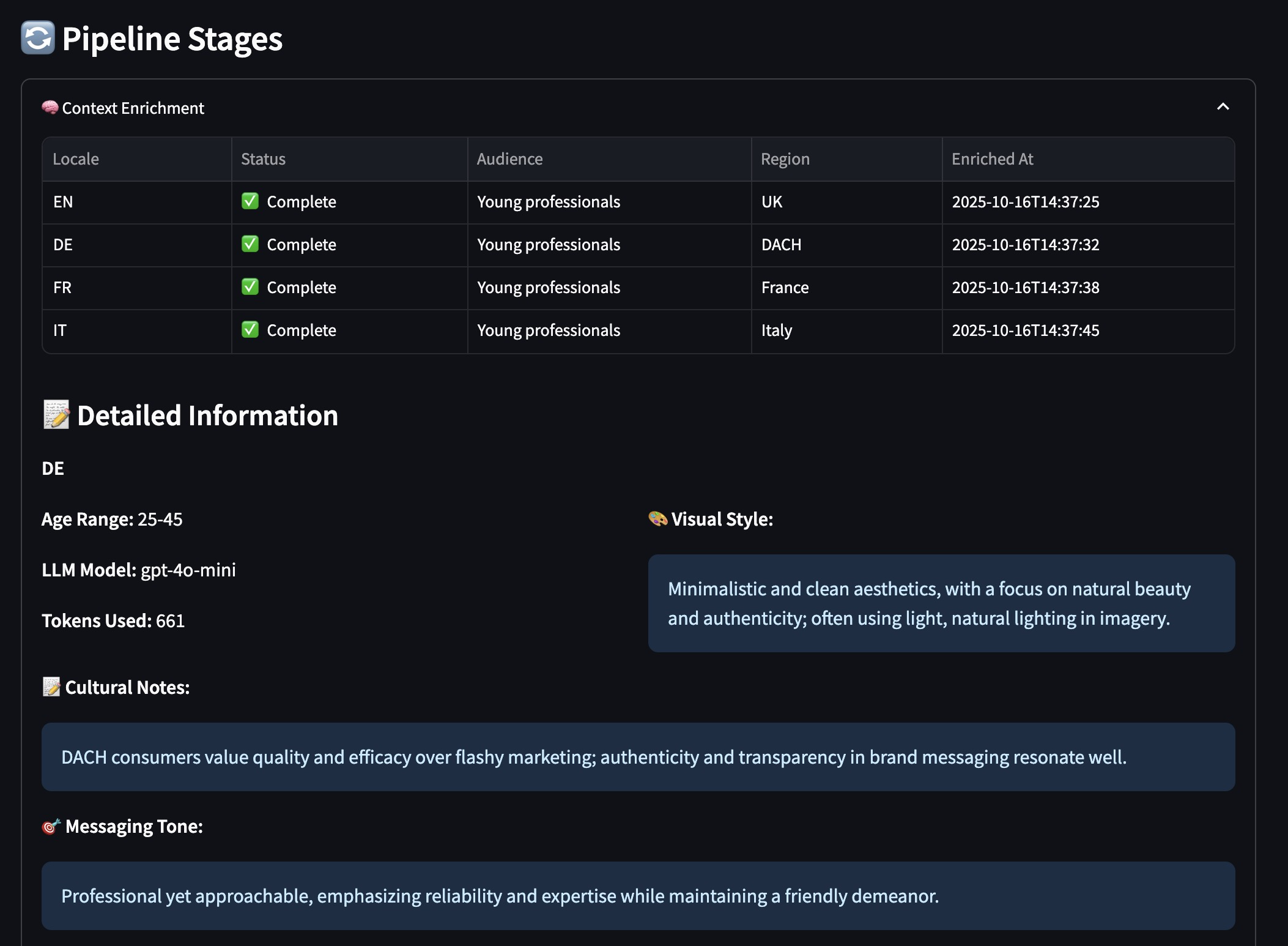

The marketing automation problem hasn’t changed: creative teams spend days generating localized ad variants across markets and formats. A single campaign with 2 products × 4 locales × 4 aspect rati...