Building an AI-Powered Creative Campaign Pipeline: From Brief to Assets in Minutes

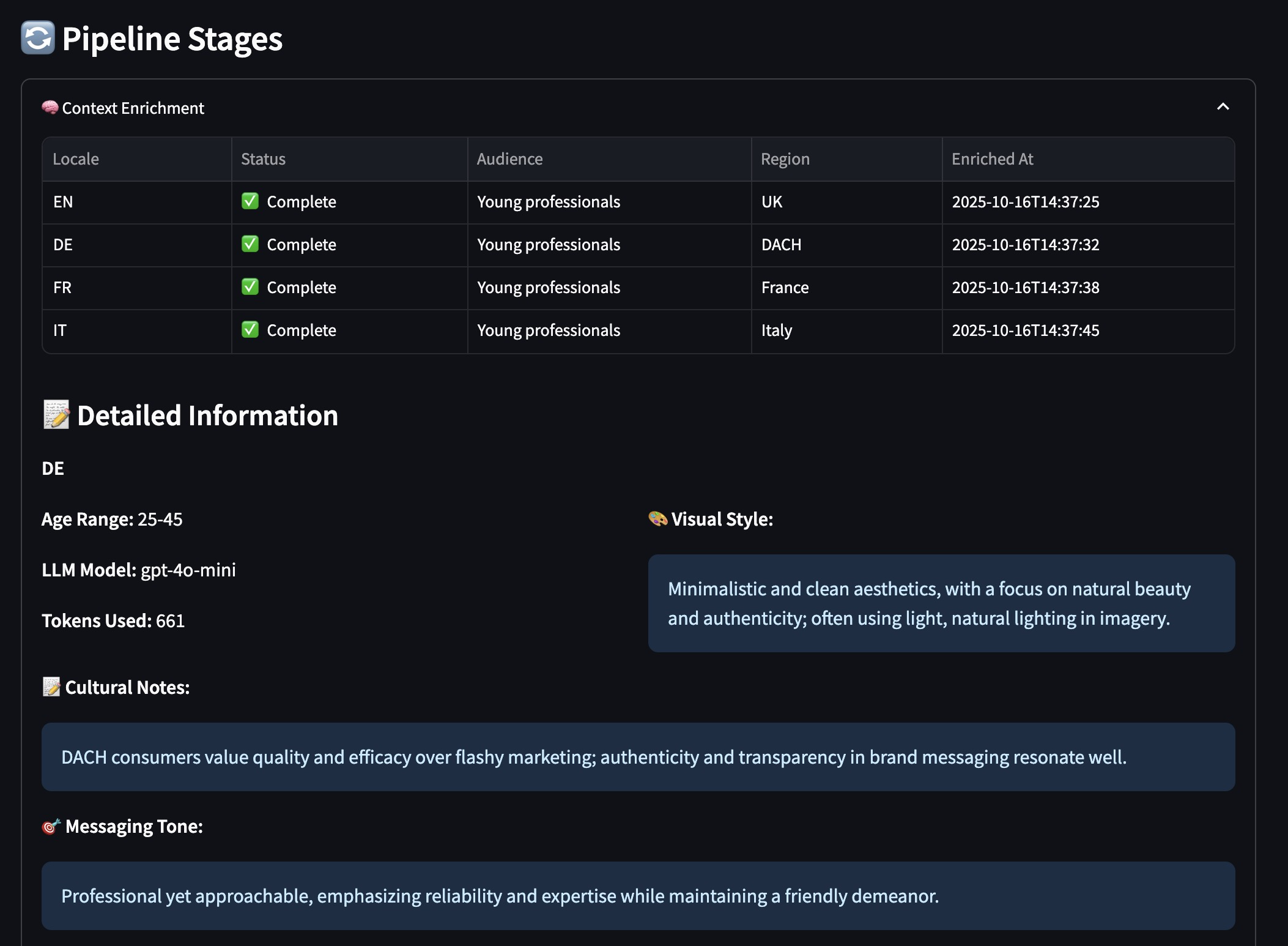

The marketing automation problem hasn’t changed: creative teams spend days generating localized ad variants across markets and formats. A single campaign with 2 products × 4 locales × 4 aspect rati...
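The three dimensions named above multiply: 2 × 4 × 4 = 32 distinct assets, before any further variation the brief might add. The minimal Python sketch below enumerates that matrix. The product names, locale codes, and aspect ratios are hypothetical placeholders, not values from the original campaign.

```python
from itertools import product

# Hypothetical campaign dimensions (illustrative placeholders only).
products = ["product_a", "product_b"]
locales = ["en-US", "de-DE", "fr-FR", "ja-JP"]
aspect_ratios = ["1:1", "9:16", "16:9", "4:5"]


def variant_matrix(products, locales, aspect_ratios):
    """Return every (product, locale, aspect_ratio) combination to render."""
    return list(product(products, locales, aspect_ratios))


variants = variant_matrix(products, locales, aspect_ratios)
print(len(variants))  # 2 * 4 * 4 = 32

# itertools.product varies the last dimension fastest.
for p, loc, ar in variants[:3]:
    print(p, loc, ar)
```

Each tuple is one asset to produce. Adding a single locale or format therefore multiplies the workload rather than adding to it.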