Choosing the Right Optimizer for Your Deep Learning Problem

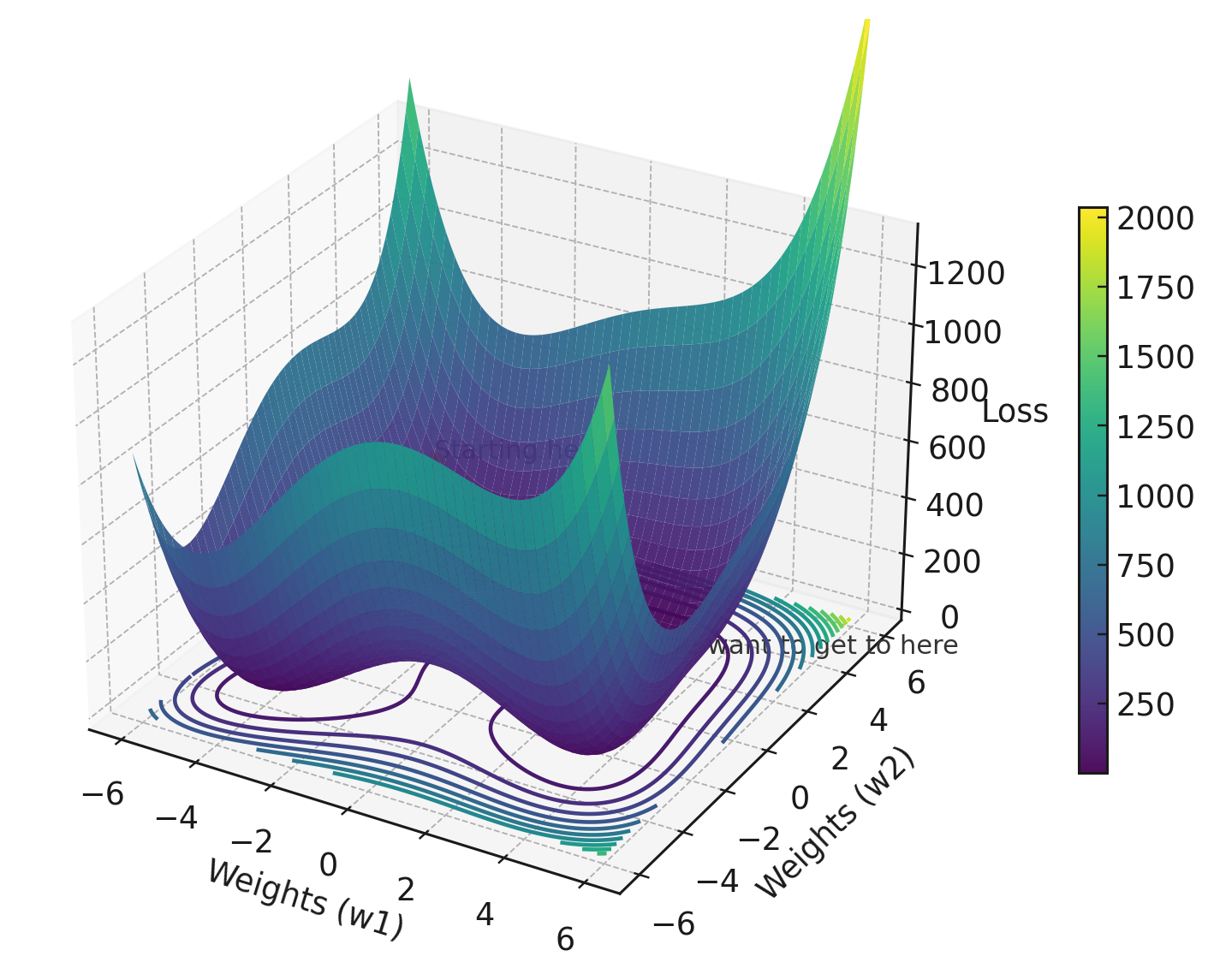

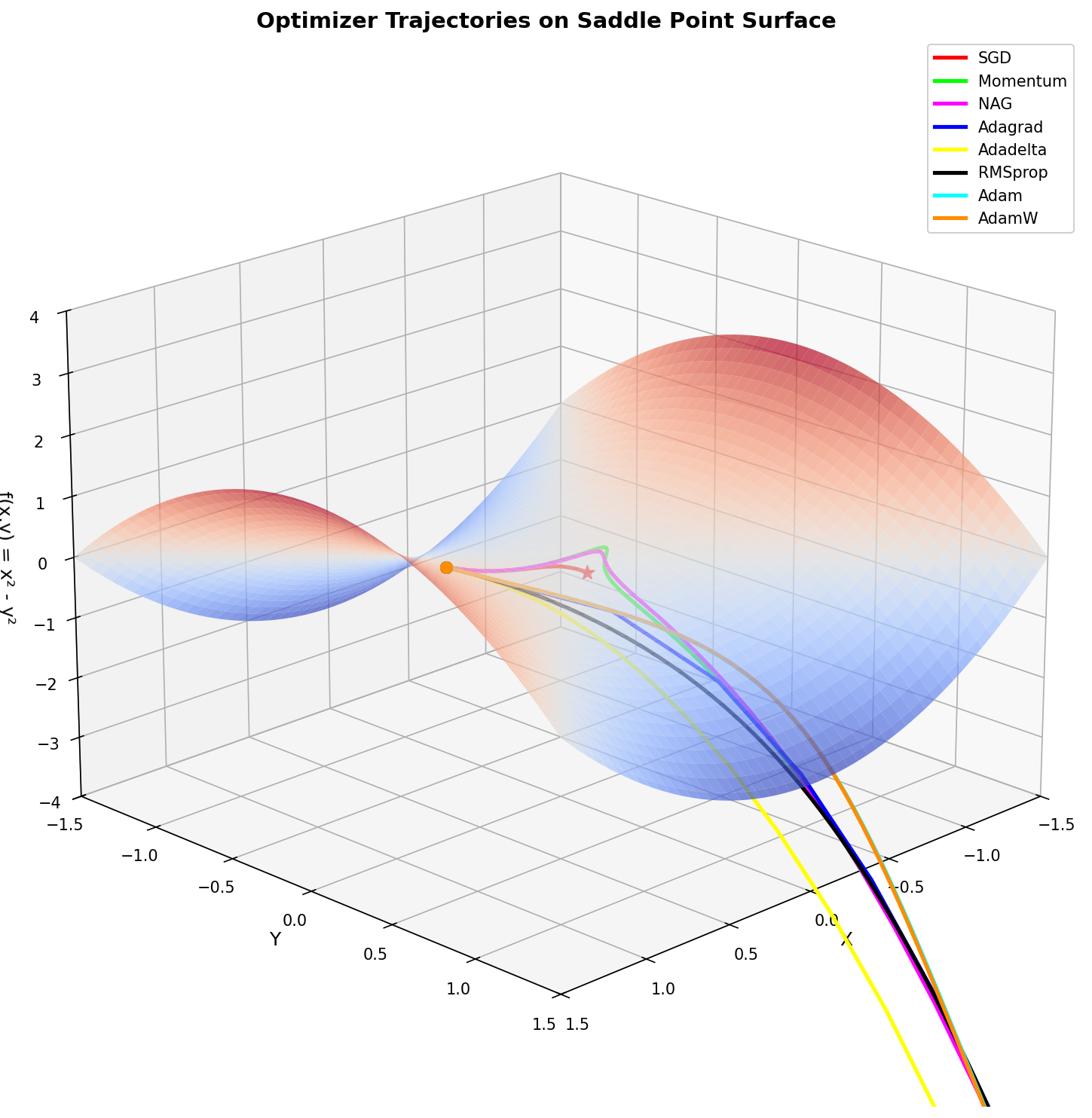

If the loss function tells your model what to minimize, the optimizer tells it how to get there. Pick the wrong optimizer and your model might converge slowly, get stuck in bad minima, or blow up ...