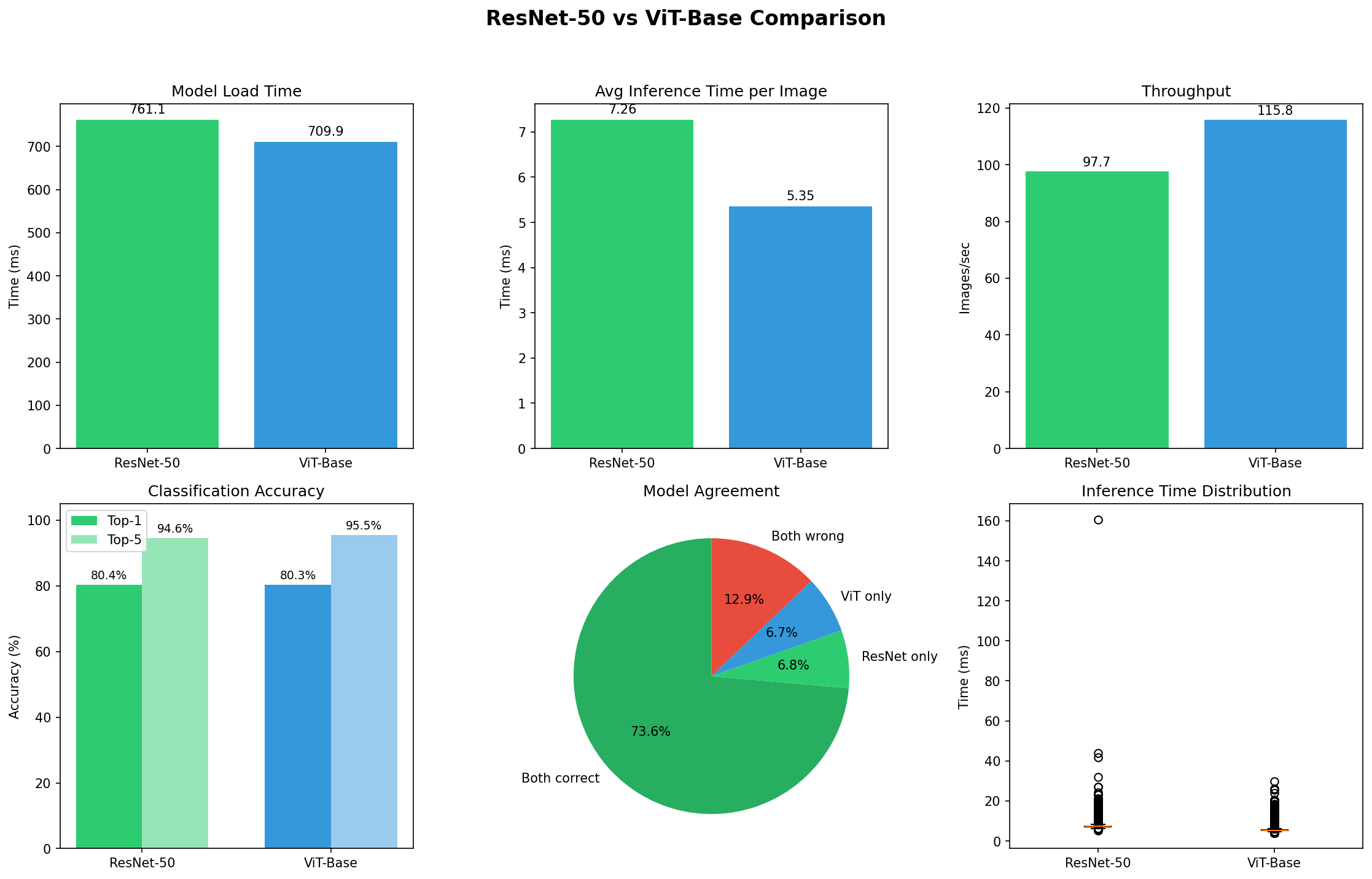

I Benchmarked ResNet vs ViT on 50K Images. They're Nearly Identical.

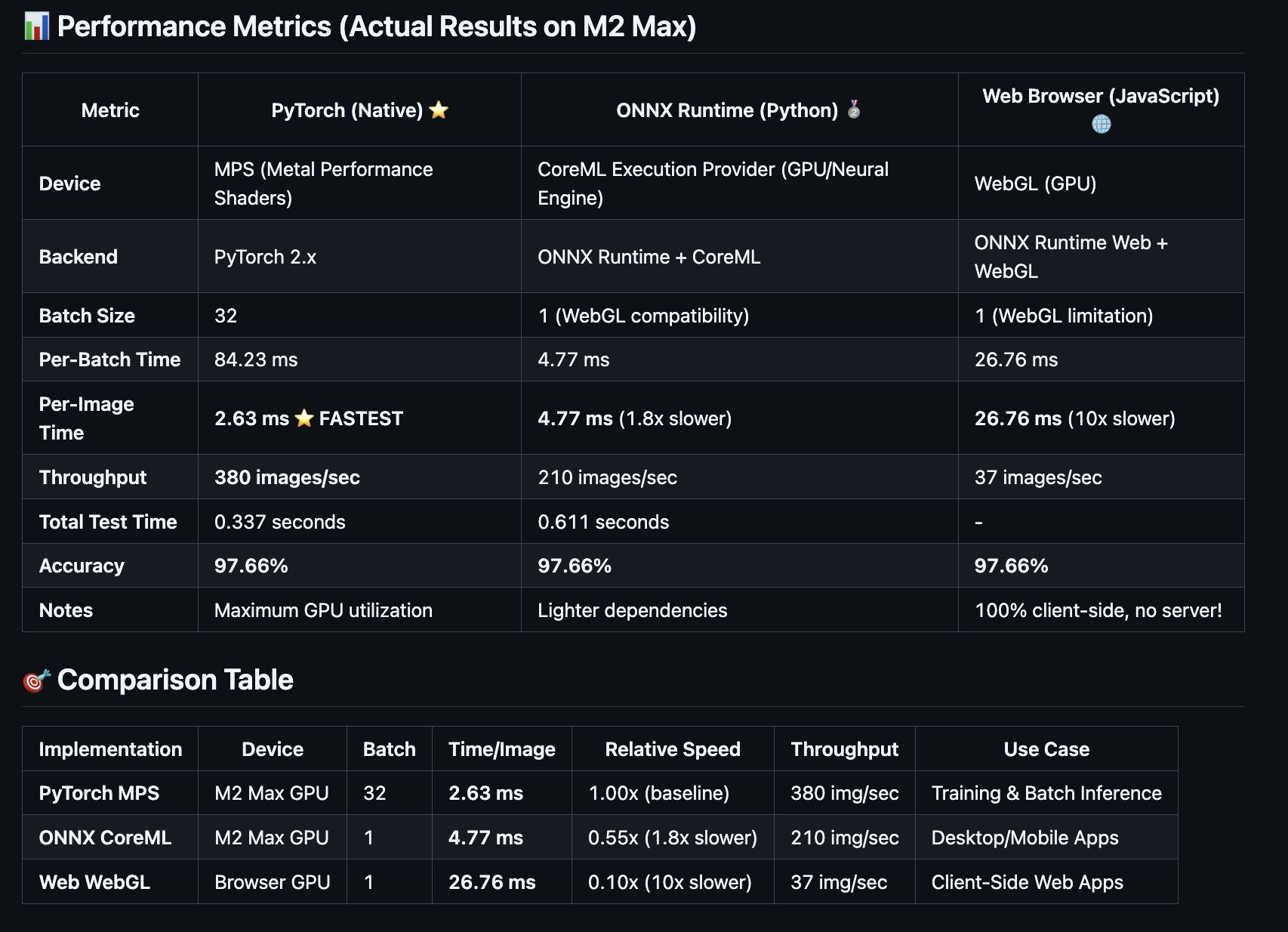

Everyone wants to throw Transformers at every computer vision problem. The research papers show impressive gains. The hype is real. But I wanted to know: What happens when you actually run the sam...