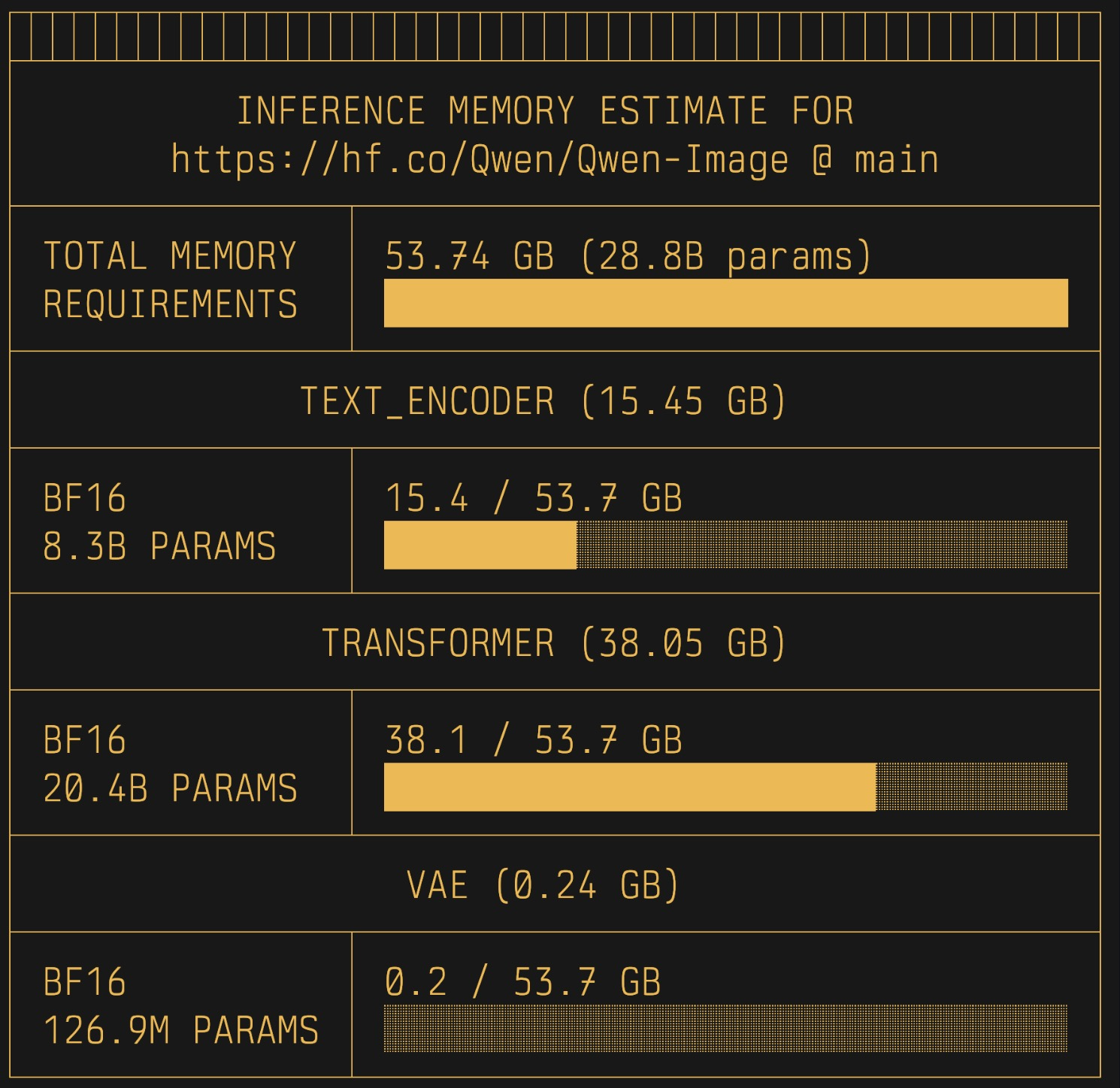

Predict Peak VRAM Before Downloading a Model (Weights + KV Cache + Quantization)

OOM debugging is a waste of time. If a model is on the Hugging Face Hub in Safetensors format, you can estimate most of the VRAM it will need before downloading the weights, by reading only the metadata hea...
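As a rough illustration of the weights part of that estimate, here is a minimal sketch of reading a Safetensors header. It relies on the documented file layout: an 8-byte little-endian length, followed by a JSON header that lists each tensor's `dtype`, `shape`, and `data_offsets`. The function names (`parse_header`, `weight_bytes`, `param_count`) and the two-tensor header are hypothetical, built in memory so the example runs offline. For a real Hub file you would fetch just these leading bytes, for instance with an HTTP Range request, which is not shown here.

```python
import json
import math
import struct


def parse_header(blob: bytes) -> dict:
    """Decode the JSON header from the leading bytes of a safetensors file."""
    # The first 8 bytes are an unsigned little-endian 64-bit header length.
    (header_len,) = struct.unpack("<Q", blob[:8])
    return json.loads(blob[8:8 + header_len])


def weight_bytes(header: dict) -> int:
    """Sum the on-disk size of every tensor using its data_offsets."""
    total = 0
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form string metadata
            continue
        start, end = info["data_offsets"]
        total += end - start
    return total


def param_count(header: dict) -> int:
    """Count elements across all tensors from their shapes."""
    return sum(
        math.prod(info["shape"])
        for name, info in header.items()
        if name != "__metadata__"
    )


# Hypothetical two-tensor header, for illustration only (no real model).
header = {
    "__metadata__": {"format": "pt"},
    "embed.weight": {"dtype": "BF16", "shape": [1000, 64],
                     "data_offsets": [0, 128000]},
    "lm_head.weight": {"dtype": "F32", "shape": [64, 10],
                       "data_offsets": [128000, 130560]},
}
header_json = json.dumps(header).encode("utf-8")
blob = struct.pack("<Q", len(header_json)) + header_json

parsed = parse_header(blob)
print(weight_bytes(parsed))  # 130560 bytes of weights
print(param_count(parsed))   # 64640 parameters
```

This covers only the bytes the weights occupy as stored. KV cache, activations, and any change of precision at load time would have to be added on top.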